The Voxco Answers Anything Blog

Read on for more in-depth content on the topics that matter and shape the world of research.

Text Analytics & AI

How Text Mining Impacts Business

How much of your business data is sitting around unused? You may have many ways to work with structured data, but your unstructured data gets overlooked because it is harder to process. Relying on structured data alone means that open-ended comments and responses don’t factor into your reports and business goals. You could overlook opportunities, miss out on valuable customer feedback, and fall behind on optimizing the customer experience. Text mining fixes this problem through a variety of helpful capabilities that make the most of verbatim comments and other unstructured data.

Benefits of Text Mining

The importance of text mining should not be underestimated. Text mining delivers significant value to your business because you’re better able to harness the insights hidden in your current and future data sets. Here are the benefits your company can realize by adopting text mining solutions, from coding to visualization.

Delve Into Your Unstructured Data

You don’t get much value out of open-ended comments that are discarded because you lack the capacity to process them. Text mining empowers your organization to quantify this information. Rather than getting bits and pieces of the sentiment behind comments, you gain visibility into the whole picture.

Optimize the Experience

Without data from your open-ended feedback and other verbatim comments, you’re not able to fully optimize experiences for users, customers, clients, employees, and other stakeholders. While you can make some improvements based solely on structured data, you can better understand what stakeholders want and how they react to changes with text mining.

You don’t want to miss the mark with customer experience optimization, especially in competitive marketplaces. Keep your customer satisfaction high by fully understanding where they’re coming from, what they’re feeling, and what they want going forward.

Generate Reports Faster

Automated processing of many parts of the text analysis means that you don’t have to pour countless resources into the project. Coding, sentiment analysis, and other time-consuming parts of text mining get handled through the software itself, with the help of Natural Language Processing and other artificial intelligence tools.

Create Custom Views of Your Data

Cut through the noise by putting together custom dashboards with the most important information. Each person involved in the project can have their own view of the data to better inform their particular position. People who are not data scientists appreciate the useful visualizations and easy access to relevant data that these types of dashboards provide.

Increase Productivity Through Automation

Manual, time-consuming processes can lead to employee disengagement, especially if you’re working with data scientists and other highly skilled data professionals. A faster text mining method not only improves productivity, but also makes better use of your staff and their work hours.

Gain a Single Version of Data Truth Through Integration

Siloed data is an enemy of accurate data and reporting. If your unstructured data is spread throughout multiple platforms, machines, spreadsheets, and other files, then it’s possible that people are working on different versions of that information. Text mining tools that have native integration for pulling data sources into the platform, as well as those with APIs that make it simple to work with your sources, consolidate all of this information in one place. You avoid version control issues and the data quality problems that come with them.

Automate Translations

Global companies may receive feedback in multiple languages. If you manually translate these verbatim comments, then you’re adding a lot of time to the process. Text mining tools can automate the translations and can interpret sentiment across multiple languages.

How Text Mining Improves Decision Making

Rather than guessing at why your audience picked the scores that they did on a survey, you can evaluate their verbatim comments to open-ended questions.

Sometimes your audience will surprise you with unexpected use cases and feedback. This will help you identify new opportunities and markets, as well as better serve your customers and other stakeholders.

If you roll out a new product, service, or campaign and there are problems with it, customer feedback also helps you speed up your reaction time to these issues. This benefit also allows you to make fast decisions when you’re running tests or putting new initiatives in place.

Data-driven decision making is essential for getting buy-in in organizations. When you can point to hard data that backs up the need for improvements, changes, and other initiatives, you’re better able to make your case with your bosses.

Examples of Text Mining

Text mining comes in many forms, depending on your business needs. It’s a flexible technology that adapts to your goals in the short and long term. You may use one or more sources of unstructured data, which can now deliver insights that were previously inaccessible due to the difficulty of manually processing this information. Some text mining solutions also work with structured data so you can perform both types of analysis within the same software. Here are examples of tests, surveys, and other research that benefit from text mining technology.

- Net Promoter Score®

- Advertising campaign tests

- Voice of the customer

- Ad creatives tests

- Concept testing

- Satisfaction testing of customers, employees, clients, patients, and others

- Survey and social media comments

- Customer support logs

- Trouble tickets

Text mining these unstructured data sources is helpful in many parts of your organization. With companies becoming focused on the customer experience and employee engagement, sentiment analysis becomes a necessary part of your critical business infrastructure. Continual improvement is what sets companies apart from one another in the modern business world, and it’s difficult to achieve that without considering open-ended survey responses. Make the most out of your company’s data and achieve the competitive edge that’s needed to take you through 2020 and beyond. Incorporate text mining software into your analysis workflow and enjoy the power and flexibility it delivers.

Net Promoter®, NPS®, NPS Prism®, and the NPS-related emoticons are registered trademarks of Bain & Company, Inc., NICE Systems, Inc., and Fred Reichheld. Net Promoter Score℠ and Net Promoter System℠ are service marks of Bain & Company, Inc., NICE Systems, Inc., and Fred Reichheld.


Text Analytics & AI

Example Market Research Questions That Teach You What to Ask & Why

You can have the most advanced market research solution in the world, but it won’t be effective if you fail to ask the right questions. The example market research questions featured below will give you a starting point when determining what you should ask your audience and internal stakeholders.

Questions to Ask Consumers

When you send out customer satisfaction surveys, Net Promoter Score surveys, and other initiatives to collect feedback, these questions provide a wealth of market research data.

Basic Demographic Information

Questions in this category cover the person’s age, household income, job title, location, and other standard demographic data. Ask them so you can segment your buyers and learn more about the differences between each group.

Would You Recommend Us to Your Friends and Family?

This question is typically used when you’re conducting Net Promoter Score surveys. You can discover your customer advocates, detractors, and those in-between.
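As a rough illustration of how those three groups are derived, the sketch below applies the usual Net Promoter Score convention to 0-10 ratings: 9 and 10 count as promoters, 7 and 8 as passives, 0 through 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and the sample ratings are made up for this example.

```python
def nps_breakdown(ratings):
    """Group 0-10 'would you recommend us' ratings and compute the score."""
    if not ratings:
        raise ValueError("at least one rating is required")
    counts = {"promoters": 0, "passives": 0, "detractors": 0}
    for rating in ratings:
        if not 0 <= rating <= 10:
            raise ValueError(f"rating out of range: {rating}")
        if rating >= 9:
            counts["promoters"] += 1
        elif rating >= 7:
            counts["passives"] += 1
        else:
            counts["detractors"] += 1
    # Score = % promoters - % detractors, so it ranges from -100 to 100.
    score = 100 * (counts["promoters"] - counts["detractors"]) / len(ratings)
    return counts, round(score, 1)


# Hypothetical survey results: 4 promoters, 3 passives, 3 detractors.
sample = [10, 9, 9, 10, 8, 7, 7, 3, 6, 5]
counts, score = nps_breakdown(sample)
print(counts, score)
```

Passives count toward the total but not toward either side of the subtraction, which is why a survey full of 7s and 8s scores zero.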

Are You a New Customer or a Returning One?

Gain a better understanding of whether you are retaining customers and driving repeat sales, or if you’re bringing in a higher proportion of people who are new to your brand.

How Long Have You Been Purchasing From Our Company?

If you have repeat customers, you want to get an idea of their lifetime value based on how many purchases they have made and approximately how many purchases they will make in the future. When you build up a highly loyal, long-term audience, you end up in a good position for sustainable business growth.

What Do You Do in Your Free Time?

You can learn a lot about your audience by discovering their hobbies and interests. Discover opportunities that you haven’t considered and new products and services that fit into these areas.

What Sources Do You Use to Learn About New Products and Services?

Do you know where buyers get pre-sales information from? You can identify weaknesses in your content marketing strategy and other parts of the sales funnel with this question. You may need to improve the resources available on your website and collaborate with leading publications and websites in your market.

How Do Our Products and Services Solve Your Problems?

You learn more about common use cases and can potentially identify problems that you haven’t considered. Your audience may end up using your offerings in unexpected ways, leading to new releases and features. They could also use help with the areas that your products overlook. Fill in the gaps and give your customers what they’re really looking for.

What is the Biggest Challenge You Face in Your Life?

Learn more about what challenges your audience faces on a day-to-day basis. You can make improvements to address these areas or move into new markets.

Why Did You Buy Our Products and Services Over a Competitor’s?

Buyers had some reason that they chose your company over others in the market. Find out what that is, whether it matches up to your unique selling proposition, and how you can capitalize on these competitive advantages.

What Do You Enjoy the Most and the Least About Our Products and Services?

Discover your standout features as well as those that aren’t meeting customer expectations.

What Features Would You Like to See Added to Our Products and Services?

Customer feedback can be a great way of putting new features on the roadmap.

How Would You Rate Your Last Experience With Our Brand?

While the overall customer experience with your brand is important, you also want to learn more about each touchpoint. You can discover customer experience trends and dig deeper into bad experiences, whether they are isolated incidents or point to a need for improvement.

Questions to Ask Internally

Market research and competitive analysis also require asking a number of internal questions. By using customer and internal surveys, you develop a complete picture of the customer experience and engagement of both sets of stakeholders.

What Does the Competitive Landscape Look Like?

This question provides an open-ended opportunity for employees to discuss the marketplace, movers and shakers in the industry, significant product and service releases, changes in technology, business models, and other details that impact your sales potential.

Do Our Current Buyer Personas Serve Our Sales, Marketing and Product Development Needs?

Your audience changes over time and your buyer personas should do the same. If you try to stick with the same set of personas as when you first started your business, you fall behind the competition and create mismatched expectations.

What Products, Services, or Features are Customers Using More Than You Anticipated?

Sometimes products take off unexpectedly, whether they achieve viral success or they become more widely adopted than predicted. You can gain more information on which products are seeing high usage, whether you need to allocate more resources to those offerings, and how you can use the momentum to secure a competitive advantage.

How Does Our Customer Experience Match Up to the Competition?

The customer experience is a critical factor in gaining and growing your customer base. If you don’t know what your competition is doing and how you match up, then you won’t be able to optimize your operations to beat them. Pay close attention to the expectations that leading companies set in your marketplace, if you don’t already hold one of the top spots.

Each customer brings a set of expectations with them when they interact with one of your touchpoints. If you build your sales funnel based on a strong understanding of the typical experience, you can improve on it.

How Can You Adapt to the Changing Needs of Consumers?

The customers you serve today may be significantly different than those who come years later. As the marketplace and technology change, you need to adapt to stay relevant. Have a plan in place to accommodate these needs. Your business processes and infrastructure should be flexible enough to evolve over time.


How to Choose the Right Solution

A Short Guide to CAPI Survey Software

Incorporating a live interviewer in your survey strategy is a tactic researchers have used for years. Yet, it remains a useful practice for collecting meaningful data from respondents today.

But what is being done to experiment with the flexible capabilities of CAPI technology and approach face-to-face surveys in new ways?

Advanced CAPI software can be a powerful tool that opens the door to dozens of new uses. Think of personal interviewing software that empowers teams to access more respondents from a wider sample.

In this blog, we’ll dive deeper into the concepts of CAPI survey software and learn how it is a significant tool in data collection.

Let’s begin with the basics.

What is CAPI survey software?

CAPI stands for ‘Computer Assisted Personal Interviewing’, in which an interviewer reads survey questions from a tablet, phone, or laptop and records the respondent’s answers on the device during the interview.

CAPI survey software is the tool that enables this. It sits alongside other survey tools, such as online survey tools and IVR.

What are the advantages of using CAPI survey software for data collection?

Using CAPI survey software for research has several benefits, some of which are:

Efficiency and Accuracy: CAPI software can help interviewers administer surveys more efficiently and accurately. The software can guide interviewers through the questionnaire, ensuring that questions are asked in the correct order and responses are recorded accurately.

Real-time Data Collection: Data collected through CAPI software can be instantly transmitted to a centralized database. This eliminates the need for manual data entry, reducing the chances of errors and allowing for faster data analysis.

Complex Question Logic: CAPI software can handle complex skip patterns, branching, and routing in questionnaires. Depending on a respondent’s answers, the software can automatically skip irrelevant questions or guide the interview in a specific direction.

Multimedia Integration: CAPI software can incorporate multimedia elements, such as images, videos, and audio clips, to enhance the survey experience and gather more detailed responses.

Data Validation: CAPI software can include built-in data validation checks. This helps ensure that respondents provide valid and consistent responses by flagging or correcting errors in real time.

Offline Capabilities: Some CAPI software can operate offline, allowing interviewers to collect data in areas with limited or no internet connectivity. Once a connection is available, the data can be synchronized with the central database.

Security: CAPI software can implement security measures to protect sensitive data. Encryption and access controls can be implemented to safeguard both respondent data and the survey itself.

Monitoring and Supervision: Supervisors can remotely monitor interviews in progress, providing support to interviewers if needed. This can lead to better quality control and consistency in data collection.

Remote Administration: In cases where face-to-face interviews are not possible, CAPI software can enable remote data collection through video conferencing, allowing interviewers to administer surveys virtually.

Customization: CAPI software often allows researchers to customize the survey layout, design, and branding to match their needs and preferences.
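To make the question logic and data validation points above more concrete, here is a minimal sketch of how a CAPI-style questionnaire might route an interview. The question IDs, the `QUESTIONS` structure, and `run_interview` are hypothetical and are not taken from any particular CAPI product.

```python
# Hypothetical questionnaire: each question carries a validator and a routing rule.
QUESTIONS = {
    "age": {
        "text": "How old are you?",
        "validate": lambda a: isinstance(a, int) and 0 < a < 120,
        "next": lambda a: "employed" if a >= 18 else "end",
    },
    "employed": {
        "text": "Are you currently employed?",
        "validate": lambda a: a in ("yes", "no"),
        "next": lambda a: "job_title" if a == "yes" else "end",
    },
    "job_title": {
        "text": "What is your job title?",
        "validate": lambda a: isinstance(a, str) and a.strip() != "",
        "next": lambda a: "end",
    },
}


def run_interview(answers, start="age"):
    """Walk the questionnaire, validating each answer and applying skip logic.

    `answers` maps question id -> the response the interviewer keyed in.
    Returns the recorded responses in the order the questions were asked.
    """
    recorded = {}
    current = start
    while current != "end":
        question = QUESTIONS[current]
        if current not in answers:
            raise KeyError(f"no answer supplied for {current!r}")
        answer = answers[current]
        if not question["validate"](answer):
            # A real tool would prompt the interviewer to re-enter the value.
            raise ValueError(f"invalid answer for {current!r}: {answer!r}")
        recorded[current] = answer
        current = question["next"](answer)
    return recorded


# The job title question is skipped because the respondent is not employed.
print(run_interview({"age": 34, "employed": "no", "job_title": "ignored"}))
```

Keeping the routing rule next to each question means the interviewer never has to decide which question comes next, which is the point of software-guided skip patterns.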

What are some of the most popular CAPI survey software use cases?

CAPI survey software can be used for a variety of purposes. Here are some of the most popular use cases of CAPI survey software:

1. Frontline for Respondents

Let’s begin with a more straightforward use case for the software: CAPI surveys can act as the first gateway to deeper data from engaged respondents down the line. For example, a quick in-person survey can lead to a follow-up self-complete survey and can eventually lead to an invite to join a curated panel.

When using CAPI as an ingredient in a richer multi-channel survey system, personal interviewing software can be used as a first step to a longer, more engaged path to getting quality insights from respondents in many forms.

2. Elderly Needs Assessment Surveys

As we age, it becomes challenging for some to remain independent in their homes. Healthcare researchers, governments, and home care services often use CAPI software to conduct needs assessment surveys.

Healthcare professionals conduct an in-home computer-assisted personal interview with elderly respondents and their caregivers to evaluate living situations and health needs. With CAPI insights and a resulting assessment, elderly and disabled individuals can be supported with a customized care plan.

3. Multilingual Self-Completion

Connecting with tourists from around the world can be difficult, as interviewers have to overcome a language barrier with international respondents.

However, the insights collected at these locations are incredibly important; they can allow market researchers and tourist boards to make informed decisions about what’s driving the local tourism economy. An effective CAPI tool should allow interviewers to seamlessly change the language of the survey and turn the device towards the respondent for a direct answer.

4. Live Event Dashboards

File under “another unexpected use case”: CAPI tools can be used for fun and engaging live results displays at events, whether a tradeshow or conference.

At these events, organizations can incorporate interesting questions into a face-to-face interview. As interviewers chat with event attendees, the responses to the questions are synchronized via Wi-Fi, with the results shown on a live display.

The results start meaningful conversations between interviewers and respondents and encourage participation in further surveys later on. Not to mention, the novelty of this tactic helps drive brand awareness!

The many uses of CAPI software are not necessarily limited to those mentioned above!

Whether you’re using CAPI software for the tried and true purpose of in-person fieldwork or something a little different, working with these outreach tools will streamline your data collection processes and help establish you as an information leader within your organization.

For those interested in broadening their toolkit, discover other market research tools that can complement and expand upon the capabilities of CAPI software.

What are the limitations of CAPI survey software?

Even though Computer-Assisted Personal Interviewing (CAPI) survey software offers various advantages for data collection, it also has its limitations. Here are some potential limitations of using CAPI survey software:

Technical Requirements: CAPI software requires electronic devices (such as tablets or laptops) for data collection. This can be a limitation in areas with limited access to such devices or where respondents are uncomfortable with technology.

High Cost of Implementation: Implementing CAPI software requires an initial investment in hardware (devices for interviewers and respondents) and software licenses. Additionally, ongoing costs may be associated with software maintenance, updates, and technical support.

Requires Training: Interviewers must be trained to use the CAPI software effectively. This training can take time and resources, and there may be a learning curve for interviewers unfamiliar with technology.

Data Security and Privacy Concerns: Storing sensitive respondent data on electronic devices raises concerns about data security. Proper encryption, data storage, and privacy protocols must be in place to prevent unauthorized access or data breaches.

Digital Divide: CAPI surveys assume that respondents are comfortable with technology and have access to devices and the internet. CAPI may exclude certain populations from participating in regions or communities with limited technological infrastructure.

Interviewer Bias: Even with CAPI software, interviewers can still introduce bias through their tone, body language, or behavior. Additionally, interviewers might inadvertently influence respondents during the survey.

Response Authenticity: In face-to-face interviews, there may be challenges in verifying the authenticity of responses. Interviewers might be unable to determine if respondents provide accurate information, which could affect data quality.

Complexity: While CAPI software can handle complex skip patterns and branching, designing and implementing these features can be time-consuming and prone to errors if not set up correctly.

Technical Glitches: Electronic devices and software can experience technical glitches or malfunctions during interviews, leading to data collection disruptions or data loss.

Limited Interaction: The structured questionnaire format of CAPI surveys might limit the scope for open-ended responses or in-depth qualitative data collection compared to other research methods like unstructured in-depth interviews or focus groups.

Cultural and Language Barriers: CAPI surveys might not be suitable for populations with diverse languages, dialects, or cultural backgrounds, especially if the software doesn’t support these variations effectively.

Limited Access to Internet: While some CAPI software can work offline, certain features or functionalities might require an internet connection, limiting data collection in areas with poor or no connectivity.

Lack of Non-verbal Cues: In face-to-face interviews, interviewers can gather insights from respondents’ non-verbal cues. When an interviewer’s attention is on the device, or when CAPI is administered remotely, these cues may be missed, potentially affecting the interpretation of responses.

Sample Bias: The use of electronic devices may lead to a bias in the sample, as respondents who are more comfortable with technology might be overrepresented.

Considering these limitations is important when deciding whether to use CAPI survey software for data collection. Depending on the research context, some of these limitations might be mitigated with careful planning, training, and adaptation of the survey methodology.

How do you use CAPI survey software?

Using Computer-Assisted Personal Interviewing (CAPI) survey software involves several steps, from designing your survey to collecting and managing data. Here’s a general guide on how to use CAPI survey software:

Choose a CAPI Software: Research and select a CAPI software that suits your research needs. Look for question logic, multimedia integration, offline capabilities, and data security features.

Survey Design: Design your survey questionnaire using the software’s interface. Create questions, add response options, set skip patterns, and include multimedia elements like images or videos.

Device Setup: Set up the devices (tablets or laptops) that interviewers will use to administer the survey. Install the CAPI software on these devices and ensure they’re properly configured.

Training: Train your interviewers on how to use the CAPI software. Ensure they understand the questionnaire flow, know how to navigate the software, and know the troubleshooting steps.

Pretest: Conduct a pretest or pilot study to identify any issues with the survey design, question logic, or software functionality. Make necessary adjustments based on the feedback.

Data Management: Set up a database or cloud storage system to store the collected data securely. Ensure that data is encrypted and backed up regularly.

Offline Setup (if applicable): Configure the software for offline use if your survey will be conducted in areas with limited internet connectivity. Ensure that collected data can be synchronized with the central database once a connection is available.

Interview Administration: Here’s the typical process for administering interviews using CAPI survey software:

a. The interviewer logs in to the software on the device.

b. The interviewer selects the respondent from the list provided or enters the respondent’s information.

c. The interviewer administers the survey following the software’s guidance, which may involve reading questions aloud to the respondent and recording their responses.

Data Validation: The CAPI software may include data validation checks to catch errors in real time. Ensure that interviewers understand how to address validation errors during the interview.

Monitoring and Support: Supervisors can remotely monitor interviews in progress to provide assistance if needed. This helps maintain data quality and consistency.

Data Synchronization: If interviews were conducted offline, synchronize the collected data with the central database once an internet connection is available.

Data Analysis: Once data collection is complete, export the collected data from the CAPI software to a compatible format for analysis in statistical software.

Data Security and Privacy: Adhere to data protection and privacy regulations. Ensure that sensitive respondent data is handled securely and that proper encryption and access controls are in place.

Quality Assurance: Conduct regular data checks to identify inconsistencies or errors. Cross-reference collected data with source materials if necessary.

Documentation: Maintain detailed documentation of the survey design, software settings, and any issues encountered during the data collection. This documentation can aid in replication and future research.

Reporting: Generate reports or summaries based on the collected data to present your findings. Visualize the data using charts, graphs, and tables as needed.

Each CAPI software might have its own specific interface and workflow. It’s important to consult the software’s user guide or documentation for detailed instructions tailored to the software you’re using. Additionally, adapt these steps to suit your research goals, target population, and specific requirements.
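The offline setup and data synchronization steps above can be sketched as a simple store-and-forward queue. This is only an illustration under stated assumptions: the `responses` table, the function names, and the `upload` callback are invented here, and a real CAPI product will have its own storage and sync mechanism.

```python
import json
import sqlite3


def open_local_store(path=":memory:"):
    """Create the on-device store (hypothetical schema) for collected responses."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS responses ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "payload TEXT NOT NULL, "
        "synced INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def save_response(conn, response):
    """Record a completed interview locally, whether or not a network is available."""
    conn.execute("INSERT INTO responses (payload) VALUES (?)", (json.dumps(response),))
    conn.commit()


def sync_pending(conn, upload):
    """Send unsynced rows through `upload`; mark a row synced only after it succeeds."""
    rows = conn.execute(
        "SELECT id, payload FROM responses WHERE synced = 0 ORDER BY id"
    ).fetchall()
    sent = 0
    for row_id, payload in rows:
        try:
            upload(json.loads(payload))
        except ConnectionError:
            break  # still offline: keep the remaining rows for the next attempt
        conn.execute("UPDATE responses SET synced = 1 WHERE id = ?", (row_id,))
        sent += 1
    conn.commit()
    return sent


# Usage: a plain list stands in for the central database in this sketch.
conn = open_local_store()
save_response(conn, {"respondent": "R001", "q1": "yes"})
save_response(conn, {"respondent": "R002", "q1": "no"})
central = []
print(sync_pending(conn, central.append), "responses synchronized")
```

Marking a row as synced only after the upload succeeds means an interrupted connection never loses an interview; at worst the row is retried on the next sync.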

Choosing the Right CAPI Software

Once you decide to use CAPI survey software for data collection, you’ll be faced with the dilemma of choosing the right one, as plenty are available in the market. No worries, we’re here to help. Read on.

Factors to consider when selecting CAPI software

Project Requirements and Scope: Understand the specific needs of your research project. Consider factors like the complexity of your survey, the type of data you’re collecting (quantitative, qualitative), the target audience, and the geographical locations where data will be collected.

User Interface and Ease of Use: The software’s interface should be intuitive and user-friendly for interviewers and respondents. Complex or confusing interfaces can lead to errors during data collection and increase training time for interviewers.

Compatibility with Different Devices: Ensure that the CAPI software is compatible with various devices, including smartphones, tablets, and PCs. This flexibility lets you choose the most suitable device for your data collection context.

Data Security and Encryption Measures: Data security is crucial to protect the confidentiality of respondent information. Check if the software provides robust encryption during data transmission and storage. Look for compliance with relevant data protection regulations.

Reporting and Analytics Features: Consider the reporting capabilities of the software. Can it generate real-time reports? Does it offer customizable data visualization options? Good reporting features can streamline your analysis process.

Customization Options for Questionnaires: The software should allow you to customize your questionnaire according to your research objectives. Check if it supports various question types (multiple choice, open-ended, Likert scale) and if it can handle complex skip patterns and branching.

Cost Considerations: Evaluate the software’s pricing structure. Some software options might have a one-time purchase fee, while others may charge based on usage or number of users. Factor in both upfront and ongoing costs.

Offline Capabilities: If data collection occurs in areas with limited internet connectivity, ensure that the software supports offline data collection. This allows interviewers to collect data without a live internet connection and sync it later.

Support and Training: Consider the level of customer support provided by the software company. Is there technical assistance available if interviewers encounter issues? Are training resources, tutorials, or documentation available?

Multimedia Integration: If your survey requires multimedia elements such as images, videos, or audio, ensure the software supports their integration. This can enhance respondent engagement and data accuracy.

Scalability: If your project involves many respondents or interviewers, ensure that the software can handle the scale without performance issues.

Data Backup and Recovery: Check if the software has data backup and recovery mechanisms. This is essential in case of device malfunctions, accidental data loss, or other unexpected situations.

Survey Logic and Skip Patterns: Ensure the software can handle complex survey logic and skip patterns. This is particularly important for surveys with conditional branching or skip instructions based on previous responses.

Language and Localization: If you’re conducting surveys in multiple languages or diverse regions, check if the software supports different languages and allows for the localization of survey content.

User Experience: It’s important to gather feedback from potential users about their experience with the software. Look for user reviews and testimonials to understand how the software performs in real-world scenarios.

By carefully evaluating each of these factors, you can choose a CAPI survey software that aligns with your research goals, maximizes data quality, and minimizes potential challenges during the data collection.


Text Analytics & AI

What Is Text Analytics?

Your business has access to countless data sources, including feedback from your clients, customers, employees, and vendors. These open-ended responses can be any text comments, such as social media posts, customer reviews, and survey responses, but analyzing them properly is challenging.

Text analytics, also known as text mining or text analysis, is the process of extracting meaningful insights and patterns from unstructured text data. It involves various techniques from natural language processing (NLP), machine learning, and computational linguistics to analyze and understand the content of open-ended comments. The main goals of text analytics are to derive actionable insights, discover trends, and extract useful information from large volumes of text responses.

Key Components of Text Analytics

The text analytics process starts with a data set that contains open-ended responses and may also include closed-ended responses, also called quantitative data. Text analytics solutions can work with data sets that are far too large to process manually, enabling your business to gain important research information that can drive your marketing strategies, customer service policies, budget allocation, product development, and countless other operations. Key components of text analytics include:

- Topic Analysis: Identifying topics or themes within the text data.

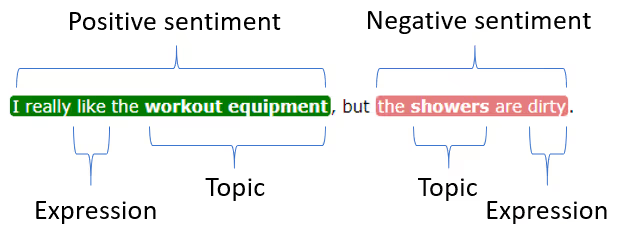

- Sentiment Analysis: Determining the sentiment (positive, negative, or neutral) expressed in the text.

- Clustering: Grouping similar texts together based on their content.

- Text Summarization: Generating a concise summary of the text data.

- Visualization: Visualizing the insights derived from text analytics through charts, graphs, word clouds, or other visual representations to facilitate interpretation.

How Is Text Analytics Used By Companies?

Text analytics is used by companies in various ways to extract valuable insights from large volumes of unstructured text data. Here are some key applications:

1. Customer Sentiment Analysis

- Purpose: Understand customer opinions and feelings about products or services.

- Application: Analyzing customer reviews, social media posts, and survey responses to gauge satisfaction and identify areas for improvement.

2. Industry Research

- Purpose: Identify market trends and consumer preferences.

- Application: Analyzing news articles, blogs, and online forums to understand industry dynamics and emerging trends.

3. Product Improvement

- Purpose: Enhance product features based on customer feedback.

- Application: Analyzing feedback from various channels to identify common issues and areas for improvement.

4. Customer Support

- Purpose: Improve customer support services.

- Application: Analyzing support tickets, chat logs, and email communications to identify common issues and streamline support processes.

5. Human Resources

- Purpose: Enhance employee experience and recruitment processes.

- Application: Analyzing employee feedback, performance reviews, and recruitment data to improve HR practices and employee satisfaction.

Capabilities of Text Analytics

Each text analytics tool has its own set of capabilities, but there are a number of features that you’ll commonly find in leading solutions on the market:

- Able to Analyze Both Structured & Unstructured Data

- Generates Clear & Descriptive Insights

- Processes Datasets of Any Size Quickly & Affordably

- Groups Data Together Logically

- Able to Drill Down to the Original Response

- Able to Trend Data

- Leverages the Latest AI Technologies

- Able to Adjust the Level of Generative AI by Project

- Produces Customizable Visualizations & Reports

- Offers Automatic Translation

- Enables Cross Tabs and Further Analysis

- Provides Data Scrubbing

- Has API Connectors

- Easily Imports & Exports Data

The Benefits of Text Analytics

Text analytics delivers many advantages to your organization. It's a critical part of extracting value from data sets with open-ended responses that you’re otherwise unable to process.

- Works with open-ended comments in many types of media and languages.

- Gives you insights to improve experiences for customers, employees, and other stakeholders.

- Gives you insights to help increase your company’s revenue.

- Reduces the time and effort needed to analyze unstructured data.

- Gives you the data you need to better control your costs.

- Helps you make more data-driven decisions.

- Enables you to act quickly on new opportunities.

What’s the Difference Between Text Mining and Text Analytics?

Though often used interchangeably, text mining and text analytics have distinct meanings and applications. Text mining is the process of discovering patterns and extracting useful information from large sets of unstructured text data.

It focuses on extracting information and knowledge from text using information extraction, categorization, clustering, association rule learning, and pattern recognition. The primary application of text mining is in data mining, where the goal is to uncover new patterns and insights.

On the other hand, text analytics encompasses a broader range of techniques used to analyze text data and extract meaningful insights. It includes the interpretative aspect of the results obtained from text mining, focusing on applying and interpreting these results to solve specific business problems.

Techniques used in text analytics include sentiment analysis, topic modeling, trend analysis, predictive analytics, and natural language processing (NLP). These techniques are employed in market research analysis, customer sentiment analysis, product review analysis, and other applications.

Find the Best Text Analytics Solution with Ascribe

If you are looking for a text analysis solution, check out CX Inspector with Theme Extractor and Generative AI. It’s our full-featured interactive software with generative AI that instantly analyzes open-ended responses and lets you group ideas together and explore data with sentiment, filters, crosstabs, and trend reports. Or contact us for a free demo with your data.

Text Analytics & AI

Text Mining Software and Text Analysis Tools

What is Text Mining

Businesses collect many types of text data, but it’s difficult to get value out of this information when manual handling is required to process it. It’s impossible to perform these duties at the scale that many companies require when you rely on manual processing, but text mining technology provides the automation needed to accomplish this goal.

At its most basic level, text mining is an automated method of extracting information from written data. There are three major categories that text mining can fall under:

- Information extraction: The text analysis software can identify and pull information directly from the text, which is often presented in a natural language form. The software can find the data that is most important by structuring the written input and identifying any patterns that show up in the data set.

- Text analysis: This type of text mining analyzes the written input for various trends and patterns and prepares the data for reporting purposes. It relies heavily on natural language processing to work with this information, as well as other types of automated analysis. The business receives actionable insights into its unstructured data.

- Knowledge discovery and extraction: Data contained in unstructured sources is processed with machine learning, allowing companies to quickly track down relevant and useful information contained in a variety of resources.

Learn more about how text mining impacts business.

How is Text Mining Used

One of the most common ways to use text mining in a business environment is for sentiment analysis. This use case allows customer experience teams, research professionals, human resource teams, marketers, and other professionals to understand how their audience feels about specific questions or topics. Net Promoter Scores and similar surveys significantly benefit from text mining.

In sentiment analysis, each response is classified as positive, negative, or neutral. The software also determines how far in a certain direction this sentiment goes. You can use this information to guide your decision-making, respond to feedback from customers or employees, and strengthen the data that you collect from other sources.

The type of text mining needed for your business depends on the data that you’re working with, the information that you’re trying to get out of your written sources, and the end use of that analysis. Text mining tools come in many shapes and forms, and the right solution depends on many factors. Here are a few options to consider.

Best Free Text Mining Tools

When you’re not sure exactly how you want to use text mining for your organization, working with a free tool makes a lot of sense. You can experiment with a variety of options to see the ones that provide the best utility and will work with your current infrastructure. Here are some of the best free text mining tools on the market.

Aylien

Aylien is an API designed for analyzing text contained in Google Sheets and other text sources. You can set this up as a business intelligence tool that’s capable of performing sentiment analysis, labeling documents, suggesting hashtags, and detecting the language that a particular data set is in. One particularly useful feature is that it can use URLs as a source as well, and it’s designed to extract only the text from a web page rather than pulling in all of the content. Your organization would need a development team that can work with the API.

Keatext

Keatext is an open source text mining platform that works with larger unstructured data sets. The system can do sentiment analysis without your company needing to configure a complete text mining solution, which can involve a lot of work on the backend if it’s not cloud-based. In addition to picking up on customer sentiment in the text, it also categorizes responses into broad topics: suggestions, questions, praise, and problems.

Datumbox

Datumbox is not strictly a text mining solution. Instead, it’s a Machine Learning framework in Java that has many capabilities that allow your company to leverage it for this purpose. It’s also an open source service. This platform groups its services into different applications, and here are the ones most relevant to text analysis software.

- Text Extraction

- Language Detection

- Sentiment Analysis

- Topic Classification

- Keyword Extraction

This robust framework offers a REST API to use these functions in your custom development projects. It includes many algorithms and models for working with unstructured data. It’s relatively straightforward to work with, although this is better for organizations that have custom development resources available.

KHCoder

KHCoder provides text mining capabilities that support a range of languages. Many text analysis tools are limited in the language support available, which is not an ideal situation for companies that operate on a global level, or for those that are in regions where more than one language is spoken at a native level. KHCoder covers 13 major languages, ranging from Dutch to Simplified Chinese.

RapidMiner Text Mining Extension

RapidMiner Text Mining Extension is part of a comprehensive data science platform. This solution is designed for advanced users, such as data scientists and data engineers. You can extract useful information from written resources, including social media updates, research journals, reviews, and others. However, this platform may be overkill for organizations that are not trying to get into the nuts and bolts of data science. It's easy to get overwhelmed with the functionality, which results in a long implementation and training period.

Textable

Textable is open source text mining software that focuses on visualizing the insights that you gain. For its basic text analysis functions, you can filter segments, create random text for sampling, and put expressions in place to automate segmenting text data.

For more advanced text mining, you can use complex algorithms that include clustering, look at segment distribution, and leverage linguistic complexity analysis. It can also recode the text that you input into it as needed.

This software is flexible and extendable, although it’s limited to smaller sets of data overall, making it better for smaller businesses than larger organizations. It’s compatible with many technologies, allowing you to use Python for additional scripting. It supports practically any text data format and encoding.

Unlike many other free text mining solutions, Textable is relatively user-friendly and offers a visual interface and built-in functions that cover your typical text mining operations. It has significant support from the developer community, so if your company has questions, support is readily available. This is a relatively beginner-friendly option, with some good features for intermediate users as well.

Google Cloud Natural Language API

Google offers its own cloud-based Natural Language API for companies that are looking to leverage a robust set of functionalities offered by this tech giant. This is a machine learning platform that supports classification, extraction, and sentiment analysis.

Google has pre-trained its natural language processing models in this solution, so you don’t have to go through that step before extracting your data. It’s deeply integrated with Google’s cloud storage solutions, which can be handy if you’re already using that as a key part of your infrastructure or you need an accessible location to store large volumes of text data.

This text mining solution also supports audio analysis through the Speech-to-Text API and optical character recognition to quickly analyze documents scanned into the system. Another integration that can prove useful is being able to use the Google Translation API in order to get a sentiment analysis run on data sources with multiple languages.

This solution is best for mid-sized to large companies that have advanced text analysis needs and the development team to support custom solutions and models. Smaller companies may not have the resources they need to get enough value out of this platform, and may not have users with enough technical knowledge to get everything set up and operational.

General Architecture for Text Engineering

General Architecture for Text Engineering, otherwise known as GATE, is a comprehensive text processing toolkit that equips development teams with the resources they need for their text mining needs.

This toolkit requires a team capable of implementing it in the organization through custom development, and there is an active community surrounding it. If you want a strong toolkit for implementing text mining into your own applications, this is a good place to get started.

The Benefits of Premium Text Mining Tools

Free text mining solutions are useful for discovering what type of capabilities you need for your organization, but scaling them up to the level that your organization needs in a production environment often requires a significant outlay. Premium text mining tools, such as the Ascribe Intelligence Suite, deliver benefits and a strong ROI that more than balance out the costs associated with implementing the solution. Here are several of the benefits that this premium text analysis suite brings to the table.

Code Verbatim Comments Quickly and Accurately

Ascribe Coder simplifies the process of categorizing large sets of verbatim comments. This computer-assisted coding solution empowers your staff with the capabilities they need to be highly productive when working their way through survey responses, email messages, social media text, and other sources. Some ways that you can implement this in your organization are testing advertising copy, studying your Net Promoter Score, working through employee engagement surveys, and doing a deep dive into what people are really saying in customer satisfaction surveys.

Gain Actionable Insights From Customer Feedback

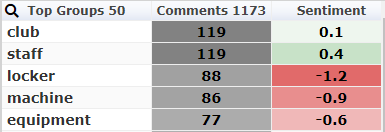

Ascribe CX Inspector goes through sets of verbatim comments and provides complete analysis and visualization, allowing your organization’s decision-makers to quickly act on this information. The automated functionality cuts down the time it takes to get usable insights from your unstructured data sets. An Instant Dashboard makes it easy to look at the information with different types of visualization, and the advanced natural language processing technology is incredibly powerful. Whether you want to use this to go through employee engagement surveys or fuel your Voice of the Customer studies, you have a robust tool on hand.

Automatically Learn More About Your Customer Experience

CX Inspector offers a highly customizable solution for researchers looking for an advanced text analytics utility. Both sentiment analysis and topic classification are included in this part of the suite, discovered with the help of natural language processing and AI. You get to better understand the “Why” behind the score your customers gave you, and how you can improve.

It includes many advanced features, including removing personally identifiable information, supporting custom rulesets, working with unstructured and structured data, creating customized applications with an API connector, and results comparison.

The interface is user-friendly and includes drag-and-drop control for developing custom taxonomies.

It’s especially useful for companies that have many languages used in their verbatim comments: this solution supports 100 languages with automatic translation.

Better Understand Your Text Analysis Through Powerful Visualizations

Getting a lot of insights out of your text mining is the first step to truly using them to fuel a data-driven action plan. Ascribe Illustrator allows you to take the next step by providing your organization with powerful and flexible data visualizations that help non-technical stakeholders understand the analytics produced by text mining platforms.

Here are a few of the many visualizations that you can use with this premium text mining software:

- Real-time visualization

- Correlation matrix

- Dynamic visualization

- Granular report detail

- Mirror charts

- Powerful filters

- Structured data integration

- Easily import and export data from multiple sources

- User-friendly dashboards

- Enhanced Word Clouds

- Heat maps

- Co-occurrence charts

While paying for premium text analytics software may require an upfront investment, you end up getting significantly higher value out of a platform like Ascribe than you would with free solutions. Your verbatim feedback and other text data contain some of the most valuable data available in your organization. It makes sense to work with a premium product that allows everyone from non-technical stakeholders to data scientists to effectively use it.

Text Analytics & AI

Natural Language Processing (NLP) for Machine and Process Learning - How They Compare

Natural language is a phrase that encompasses human communication. The way that people talk and the way words are used in everyday life are part of natural language. Processing this type of natural language is a difficult task for computers, as there are so many factors that influence the way that people interact with their environment and each other. The rules are few and far between, and can vary significantly based on the language in question, as well as the dialect, the relationship of the people talking, and the context in which they are having the conversation.

Natural language processing (NLP) is a type of computational linguistics that often uses machine learning to power computer-based understanding of how people communicate with each other. NLP leverages large data sets to create applications that understand the semantics, syntax, and context of a given conversation.

Natural language processing is an essential part of many types of technology, including voice assistants, chatbots, and sentiment analysis tools. NLP analytics empowers computers to understand human language in spoken or written form without needing the person to structure their conversation in a specific way. They can talk or type naturally, and the NLP system interprets what they’re asking about from there.

Machine learning is a type of artificial intelligence that, in this context, uses learning models to power its understanding of natural language. It’s based on a learning framework that allows the machine to train itself on data that’s been input. It can use many types of models to process the information and develop a better understanding of it. It’s able to interpret both standard and out-of-the-ordinary inquiries. Due to its continual improvements, it’s able to handle these edge cases without getting tripped up, unlike a strict rules-based system.

Natural language processing brings many benefits to an organization that has many processes that depend on natural language input and output. The biggest advantage of NLP technology is automating time-consuming processes, such as categorizing text documents, answering basic customer support questions, and gaining deeper insight into large text data sets.

Is Natural Language Processing Machine Learning?

It’s common for some confusion to arise over the relationship between natural language processing and machine learning. Machine learning can be used as a component in natural language processing technology. However, there are many types of NLP machines that perform more basic functionality and do not rely on machine learning or artificial intelligence. For example, a natural language processing solution that is simply extracting basic information may be able to rely on algorithms that don’t need to continually learn through AI.

For more complex applications of natural language processing, the systems are using machine learning models to improve their understanding of human speech. Machine learning models also make it possible to adjust to shifts in language over time. Natural language processing may be using supervised machine learning, unsupervised machine learning, both, or neither alongside other technologies to fuel its applications.

Machine learning can pick up on patterns in speech, identify contextual clues, understand the sentiment behind a message, and learn other important information about the voice or text input. Sophisticated technology solutions that require a high level of understanding to hold conversations with humans require machine learning to make this possible.
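As an illustration of basic extraction that involves no learning at all, here is a minimal sketch that pulls email addresses and order numbers out of a comment using fixed patterns. The `ORD-` order-number format and the `extract_basic_info` name are hypothetical, chosen only for this example.

```python
import re

# Fixed patterns: nothing here is learned from data.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ORDER_PATTERN = re.compile(r"\bORD-\d+\b")  # hypothetical order-number format


def extract_basic_info(text: str) -> dict[str, list[str]]:
    """Return every email address and order number found in the text."""
    return {
        "emails": EMAIL_PATTERN.findall(text),
        "orders": ORDER_PATTERN.findall(text),
    }


comment = "Order ORD-1042 never arrived. Reach me at pat@example.com."
print(extract_basic_info(comment))
# {'emails': ['pat@example.com'], 'orders': ['ORD-1042']}
```

Rules like these are predictable and cheap, but they only find what the patterns anticipate, which is where the machine learning approaches described above come in.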

Machine Learning vs. Natural Language Processing (NLP)

You can think of machine learning and natural language processing in a Venn diagram that has many pieces in the overlapping section. Machine learning has many useful features that help with the development of natural language processing systems, and both of them fall under the broad label of artificial intelligence.

Organizations don’t need to choose one or the other for development that involves natural language input or output. Instead, these two work hand-in-hand to tackle the complex problem that human communication represents.

Supervised Machine Learning for Natural Language Processing and Text Analytics

Supervised machine learning means that the system is given examples of what it is supposed to be looking for so it knows what it is supposed to be learning. In natural language processing applications and machine learning text analysis, data scientists will go through documents and tag the important parts for the machine.

It is important that the data fed into the system is clean and accurate, as this type of machine learning requires quality input or it is unable to produce the expected results. After a sufficient amount of training, data that has not been tagged at all is sent through the system. At that point, the machine learning technology will look at this text and analyze it based on what it learned from the examples.

This machine learning use case leverages statistical models to fuel its understanding. It becomes more accurate over time, and developers can expand the textual information it interprets as it learns. Supervised machine learning does have some challenges when it comes to understanding edge cases, as natural language processing in this context relies heavily on statistical models.

While the exact method that data scientists use to train the system varies from application to application, there are a few core categories that you’ll find in natural language processing and text analytics.

- Tokenization: The text gets distilled into individual words. These “tokens” allow the system to start by identifying the base words involved in the text before it continues processing the material.

- Categorization: You teach the machine about the important, overarching categories of content. The manipulation of this data allows for a deeper understanding of the context the text appears in.

- Classification: This identifies what class the text data belongs to.

- Part of Speech tagging: Remember diagramming sentences in English class? This is essentially the same process, just for a natural language processing system.

- Sentiment analysis: What is the tone of the text? This category looks at the emotions behind the words, and generally assigns it a value that falls under positive, negative, or neutral standing.

- Named entity recognition: In addition to providing the individual words, you also need to cover important entities. For some systems, this refers to names and proper nouns. In others, you’ll need to highlight other pieces of information, such as hashtags.
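To make the supervised workflow concrete, here is a minimal sketch that combines tokenization with classification: the model is trained on a handful of tagged comments and then asked to label untagged text. It uses a naive Bayes classifier with add-one smoothing as a stand-in for whatever statistical model a real system would use; the class name, labels, and training comments are all invented for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Tokenization: distill the text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """A tiny multinomial naive Bayes text classifier (illustrative only)."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.label_counts: Counter = Counter()
        self.vocabulary: set[str] = set()

    def train(self, tagged_examples: list[tuple[str, str]]) -> None:
        """Learn word frequencies per label from (text, label) pairs."""
        for text, label in tagged_examples:
            self.label_counts[label] += 1
            for token in tokenize(text):
                self.word_counts[label][token] += 1
                self.vocabulary.add(token)

    def classify(self, text: str) -> str:
        """Return the label with the highest log-probability for the text."""
        total_examples = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            score = math.log(count / total_examples)
            total_words = sum(self.word_counts[label].values())
            for token in tokenize(text):
                # Add-one smoothing keeps unseen words from zeroing the score.
                likelihood = (self.word_counts[label][token] + 1) / (
                    total_words + len(self.vocabulary)
                )
                score += math.log(likelihood)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


tagged = [
    ("The checkout page keeps crashing", "problem"),
    ("My invoice shows the wrong amount", "problem"),
    ("Your support team was wonderful", "praise"),
    ("I love how easy the setup was", "praise"),
]
model = NaiveBayesClassifier()
model.train(tagged)
print(model.classify("the setup was easy and wonderful"))  # praise
```

With only four training examples this is a toy, which also illustrates the point about input quality: the model can only reflect the tagged data it was given.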

Unsupervised Machine Learning for Natural Language Processing and Text Analytics

Unsupervised machine learning does not require data scientists to create tagged training data. It doesn’t require human supervision to learn about the data that is input into it. Since it’s not operating off of defined examples, it’s able to pick up on more out-of-the-box cases and patterns over time. Since it’s less labor intensive than a supervised machine learning technique, it’s frequently used to analyze large data sets and for broad pattern recognition and understanding of text.

There are several types of unsupervised machine learning models:

- Clustering: Text documents that are similar are clustered into sets. The system then looks at the hierarchy of this information and organizes it accordingly.

- Matrix factorization: This machine learning technique looks for latent factors in data matrices. These factors can be defined in many ways, and are based on similar characteristics.

- Latent Semantic Indexing: Latent Semantic Indexing frequently comes up in conversations about search engines and search engine optimization. It refers to the relationship between words and phrases so that it can group related text together. You can see an example of this technology in action whenever Google suggests search results that include contextually related words.
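The clustering idea can be sketched without any tagged data. The example below assumes simple bag-of-words vectors, cosine similarity, and a greedy single-pass grouping; the `threshold` value and the sample comments are arbitrary choices for illustration, and it does not attempt the hierarchical organization that fuller systems perform.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Represent a text as a bag of lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (0.0 to 1.0)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster(texts: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedily add each text to the first group whose seed text is similar."""
    clusters: list[list[str]] = []
    for text in texts:
        vector = vectorize(text)
        for group in clusters:
            # Compare against the first member of the group (its "seed").
            if cosine_similarity(vector, vectorize(group[0])) >= threshold:
                group.append(text)
                break
        else:
            # No existing group was similar enough, so start a new one.
            clusters.append([text])
    return clusters


comments = [
    "delivery was late again",
    "the password reset email never came",
    "late delivery and damaged box",
    "password reset link never works",
]
for group in cluster(comments):
    print(group)
```

No one told the code that "delivery" and "password reset" are topics; the groups emerge from word overlap alone, which is the essence of the unsupervised approach.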

Deep Learning

Another phrase that comes up frequently in discussions about natural language processing and machine learning is deep learning. Deep learning is artificial intelligence technology based on simulating the way the human brain works through a large neural network. It’s used to expand on learning algorithms, deal with data sets that are ever-increasing in size, and work with more complex natural language use cases.

It gets its name by looking deeper into the data than standard machine learning techniques. Rather than getting a surface-level understanding of the information, it produces comprehensive and easily scalable results. Unlike standard machine learning techniques, deep learning does not hit a wall in how much it can learn and scale over time. It starts off by learning simple concepts and then builds upon this learning to expand into more complicated ones. This continual building process makes it possible for the machine to develop a broad range of understanding that’s necessary for high-level natural language processing projects.

Deep learning also benefits natural language processing by improving both supervised and unsupervised machine learning models. For example, it has a functionality referred to as feature learning that is excellent for extracting information from large sets of raw data.

NLP Machine Learning Techniques

Text mining and natural language processing are related technologies that help companies understand more about text that they work with on a daily basis. The importance of text mining cannot be overstated.

The type of machine learning technique that a natural language processing system uses depends on the goals of the application, the resources available, and the type of text that’s being analyzed. Here are some of the most common techniques you’ll encounter.

Text Embeddings

This technique moves beyond looking at words as individual entities. It expands the natural language processing system’s understanding by looking at what surrounds the text where it’s embedded. This information provides valuable context clues about the situation in which the word is being used, whether its meaning is changed from the base dictionary definition, and what the user means when they are using it.

You’ll often find this technique used in deep learning natural language processing applications, or those that are addressing more complex use cases that require a better understanding of what’s being said. When this technique looks for contextually relevant words, it also automates the removal of text that doesn’t further understanding. For example, it doesn’t need to process articles such as “a” and “an.”

One representation of the text embeddings technique in action is predictive text on cell phones. It’s attempting to predict the next word in the sequence, which it’s only able to do by identifying words and phrases that appear around it frequently.
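The predictive text example can be approximated with a far simpler technique than learned embeddings: counting which word most often follows another. The sketch below is a plain bigram frequency model, not an embedding model, and the function names and sample sentences are assumptions made for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def build_next_word_model(sentences: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    following: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Suggest the most frequent follower of a word, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


history = [
    "thank you for your help",
    "thank you so much",
    "thank goodness it works",
]
model = build_next_word_model(history)
print(predict_next(model, "thank"))  # you
```

Real embeddings go further by representing each word as a vector learned from its surroundings, so that words used in similar contexts end up close together, but the underlying signal is the same: the company a word keeps.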

Machine Translation

This technique allows NLP systems to automate the translation process from one language to another. It relies on both word-for-word translations and those that are able to identify and get context to facilitate accurate translations between languages. Google Translate is one of the most well-known use cases of this technique, but there are many ways that it’s used throughout the global marketplace.

Machine learning and deep learning can improve the results by allowing the system to build upon its base understanding over time. It might start out with a supervised machine learning model that inputs a dictionary of words and phrases to translate and then grows that understanding through multiple data sources. This evolution over time allows it to pick up on speech and language nuances, such as slang.

Human language is complex and being able to produce accurate translations requires a powerful natural language processing system that can work with both the base translation and contextual cues that lead to a deeper understanding of the message that is being communicated. It’s the difference between base translation and interpretation.

In a global marketplace, having a powerful machine translation solution available means that organizations can address the needs of the international markets in a way that scales seamlessly. While you still need human staff to go through the translations to correct errors and localize the information for the end user, it takes care of a substantial part of the heavy lifting.

Conversations

One of the most common contexts that natural language processing comes up in is conversational AI, such as chatbots. This technique is focused on allowing a machine to have a naturally flowing conversation with the users interacting with it. It moves away from a fully scripted experience by allowing the bot to create a more natural sounding response that fits into the flow of the conversation.

Basic chatbots can provide the users with information that’s based on key parts of the input message. They can identify relevant keywords within the text, look for phrases that indicate the type of assistance the user needs, and work with other semi-structured data. The user doesn’t need to change the way they typically type to get a relevant response.

However, open-ended conversations are not possible on the basic end of things. A more advanced natural language processing system leveraging deep learning is needed for advanced use cases.

The training data used for understanding conversations often comes from the company’s communications between customer service and the customers. It provides broad exposure to the way people talk when interacting with the business, allowing the system to understand requests made in a wide range of conversational styles and dialects. While everyone reaching out to the company may share a common language, their verbiage, slang, and writing voice can be drastically different from person to person.
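The basic, keyword-driven end of the chatbot spectrum can be sketched in a few lines. The intents, keyword sets, and canned replies below are hypothetical; a production bot would draw them from real customer service data.

```python
import re

# Hypothetical intents and replies, invented for this illustration.
INTENT_KEYWORDS = {
    "billing": {"invoice", "charge", "refund", "billing"},
    "shipping": {"delivery", "shipping", "package", "tracking"},
}
RESPONSES = {
    "billing": "I can help with billing. Could you share your invoice number?",
    "shipping": "Let me look into your delivery. What is your tracking number?",
    "fallback": "I'm not sure I understood. Let me connect you with an agent.",
}


def detect_intent(message: str) -> str:
    """Pick the intent whose keywords appear most often in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_intent, best_hits = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent


def reply(message: str) -> str:
    """Return the canned response for the detected intent."""
    return RESPONSES[detect_intent(message)]


print(reply("Where is my package? The tracking page is empty"))
print(reply("Tell me a joke"))
```

The second message falls through to the fallback reply, which shows the limit described above: keyword matching handles anticipated requests but cannot hold an open-ended conversation.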

Sentiment Analysis

Knowing what is being communicated depends on more than simply understanding the words being said. It’s also important to consider the emotions behind the conversation. For example, if you use natural language processing as part of your customer support processes, it’s important to know whether the person is frustrated and experiencing negative emotions. Sentiment analysis is the technique that brings this data to natural language processing.

The signs that someone is upset can be incredibly subtle in text form, and detecting them requires a lot of data about how negative and positive emotions are expressed in text. This technique is useful when you want to learn more about your customer base and how they feel about your company or products. You can use sentiment analysis tools to automate the process of going through customer feedback from surveys to get a big picture view of their feelings.

This type of system can also help you sort responses into those that may need a direct response or follow-up, such as those that are overwhelmingly negative. It’s an opportunity for a business to right wrongs and turn detractors into advocates. On the flip side, you can also use this information to determine people who would be exceptional customer advocates, as well as those who could use a little push to end up on the positive side of the sentiment analysis.

The natural language processing system uses an understanding of smaller elements of the text to get to the meaning behind the text. It automates a process that can be incredibly painstaking to try to do manually.
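Here is a minimal sketch of scoring and sorting feedback, assuming a tiny hand-made word list rather than a trained model. The lexicons, thresholds, and bucket names are illustrative assumptions, and a lexicon approach like this misses negation, sarcasm, and the subtle cues mentioned above.

```python
import re

# Tiny illustrative lexicons (assumptions, not a real product's word lists).
POSITIVE = {"great", "love", "helpful", "fast", "easy"}
NEGATIVE = {"slow", "broken", "rude", "confusing", "hate"}


def score_sentiment(comment: str) -> float:
    """Score a comment from -1.0 (negative) to 1.0 (positive); 0.0 is neutral."""
    words = re.findall(r"[a-z']+", comment.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def triage(
    comments: list[str],
    follow_up_below: float = -0.5,
    advocate_above: float = 0.5,
) -> dict[str, list[str]]:
    """Sort comments into follow-up, potential advocate, and everything else."""
    buckets: dict[str, list[str]] = {
        "follow_up": [],
        "potential_advocate": [],
        "other": [],
    }
    for comment in comments:
        score = score_sentiment(comment)
        if score <= follow_up_below:
            buckets["follow_up"].append(comment)
        elif score >= advocate_above:
            buckets["potential_advocate"].append(comment)
        else:
            buckets["other"].append(comment)
    return buckets


feedback = [
    "The agent was rude and the app is broken",
    "Fast, helpful, and easy to use",
    "Setup was easy but reports are slow",
]
for bucket, items in triage(feedback).items():
    print(bucket, items)
```

The score captures both direction and strength, so the thresholds can be tuned to decide how negative a comment must be before it is routed for a direct response.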

Question Answering

Natural language processing is well suited to automating the process of answering questions and finding relevant information by analyzing text from multiple sources. It creates a quality user experience by digging through the data to find the exact answer to what users are asking, without requiring them to sort through multiple documents on their own or find the answer buried in the text.

To answer questions, NLP must be able to understand the question being asked, the context it’s being asked in, and the information that best addresses the inquiry. You’ll frequently see this technique used as part of customer service, information management, and chatbot products.

Deep learning is useful for this application, as it can distill the information into a contextually relevant answer based on a wide range of data. It determines whether a piece of text is useful for answering the inquiry, and which parts are most important.

Once the system has gone through this sequence, the answer needs to be assembled in natural language so the user can understand the information.

Text Summarization

Data sets have reached awe-inspiring sizes in the modern business world, to the point where it would be nearly impossible for human staff to manually go through all of the information to create summaries of the data. Thankfully, natural language processing is capable of automating this process to allow organizations to derive value from these big data sets.

There are a few aspects that text summarization needs to address with the use of natural language processing. The first is that it needs to understand and recognize the parts of the text that are the most important to the users accessing it. The type of information that is most needed from a document would be drastically different for a doctor and an accountant.

The information must be accurate and presented in a form that is short and easy to understand. Some real-world examples of this technique in use include automated summaries of news stories, article digests that provide a useful excerpt as a preview, and the information that is given in alerts in a system.

This technique works by scanning the document for word frequencies. Words that appear frequently are likely to be important to understanding the full text. The sentences that contain these words are pulled out as the ones most likely to produce a basic understanding of the document, and the excerpts are then ordered to match the flow of the original.

Text summarization can go a step further and move from an intelligent excerpt to an abstract that sounds natural. The latter requires more advanced natural language processing solutions that can create the summary and then develop the abstract in natural dialogue.
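The frequency-based excerpt approach described above can be sketched as follows. This is a minimal illustration, not a production summarizer: the stop-word list is a small hypothetical one, the sentence splitter is naive, and scoring by summed word frequency tends to favor longer sentences.

```python
import re
from collections import Counter

# Small hypothetical stop-word list so common function words don't dominate.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "it",
    "that", "this", "for", "on", "with", "was", "as", "by", "at", "be",
}


def content_words(text: str) -> list:
    """Lowercased words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, max_sentences: int = 2) -> str:
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    frequencies = Counter(content_words(text))

    def score(sentence: str) -> int:
        # A sentence scores higher when it contains frequently used words.
        return sum(frequencies[word] for word in content_words(sentence))

    # Rank sentence positions by score, keep the top few,
    # then restore the original order so the excerpt follows the source.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


document = (
    "Customers praised the delivery speed. The weather was nice. "
    "Delivery speed matters to customers more than price."
)
print(summarize(document))
```

Moving from this kind of excerpt to a natural-sounding abstract is the step that calls for the more advanced solutions mentioned above.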

Attention Mechanism

Attention in the natural language processing context is modeled on the way visual attention works for people. When you look at a document, you pay attention to different sections of the page rather than narrowing your focus to an individual word. You might skim over the text for a quick look at the information, and visual elements such as headings, ordered lists, and important phrases and keywords will jump out at you as the most important data.

Attention mechanisms build on the way people look through documents. They prioritize the most important parts of the text while placing less focus on anything that falls outside of that primary focus. It’s an excellent way of adding relevancy and context to natural language processing. You’ll find this technique used in machine translation and in creating automated captions for images.

Are you ready to see what natural language processing can do for your business? Contact us to learn more about our powerful sentiment analysis solutions that provide actionable, real-time information based on user feedback.

Text Analytics & AI

Customer Experience Analysis: How to improve customer loyalty and retention

The global marketplace puts businesses in a position where you need to compete with organizations from around the world. Standing out on price becomes a difficult or impossible task, so the customer experience has moved into a vital position of importance. Customer loyalty and retention are tied to the way your buyers feel about your brand throughout their interactions. Customer experience analysis tools provide vital insight into the ways that you can address problems and lead consumers to higher satisfaction levels. However, knowing which type of tool to use and the ways to collect the data for them are important to getting actionable information.

Problems With Only Relying on Surveys for Customer Satisfaction Metrics

One of the most common ways of collecting data about the customer experience is through surveys. You may be familiar with the Net Promoter Score system, which rates customer loyalty on a 0-10 scale. The survey used for this method is based on a single question: “How likely are you to recommend our business to others?” Other surveys have a broader scope, but both types focus on closed-ended questions. If the consumer has additional feedback on topic areas that aren't covered in the questions, you lose the opportunity to collect that data. Using open-ended questions and taking an in-depth look at what customers say in their answers gives you a deeper understanding of your positive and negative areas. Sometimes this can be as simple as putting a text comment box at the end. In other cases, you could have fill-in responses for each question.
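For reference, the Net Promoter Score is conventionally calculated from 0 to 10 ratings by subtracting the percentage of detractors (ratings of 0 to 6) from the percentage of promoters (ratings of 9 or 10). The sketch below uses made-up ratings, and the function name is our own.

```python
def net_promoter_score(ratings):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(rating < 0 or rating > 10 for rating in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(rating >= 9 for rating in ratings)
    detractors = sum(rating <= 6 for rating in ratings)
    # Ratings of 7 or 8 (passives) count toward the total but neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Made-up survey responses for illustration.
sample_ratings = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(net_promoter_score(sample_ratings))  # 20.0
```

The single number is easy to track over time, but it says nothing about why customers answered the way they did, which is the gap open-ended questions fill.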

How to Get Better Customer Feedback

To get the most out of your customer experience analysis tools, you need to start by establishing a plan to get quality feedback. Here are three categories to consider:

Direct

This input is given to your company by the customer. First-party data gives you an excellent look at what the consumers are feeling when they engage with your brand. You get this data from a number of collection methods, including survey results, studies and customer support histories.

Indirect

The customer is talking about your company, but they aren't saying it directly to you. You run into this type of feedback on social media, with buyers sharing information in groups or on their social media profiles. If you use social listening tools for sales prospecting or marketing opportunities, you can repurpose those solutions to find more feedback sources. Reviews on people's websites, social media profiles, and dedicated review websites are also important.

Inferred

You can make an educated guess about customer experiences through the data that you have available. Analytics tools can give you insight on what your customers do when they're engaging with your brand.

Once you're collecting customer data from a variety of sources, you need a way to analyze it properly. A sentiment analysis tool looks through the customer information to tell you more about how they feel about the experience and your brand. While you can try to do this part of the process manually, it requires an extensive amount of human resources to accomplish, as well as a lot of time.

Looking at Product-specific Customer Experience Analytics

One way to use this information to benefit customer loyalty and satisfaction is by analyzing it on a product-specific basis. When your company has many offerings for your customers, looking at the overall feedback makes it difficult to know how the individual product experiences are doing. A sentiment analysis tool that can sort the feedback into groups for each product makes it possible to look at the positive and negative factors influencing the customer experience and judge how to improve sentiment. Some of the things you end up learning are whether customers want to see new features or models for your products, whether they've responded to promotions during the purchase process, and whether products may need to be shelved or completely reworked.
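As a rough sketch of what sorting feedback into product groups looks like in practice, the Python below averages sentiment scores per product. The product names and scores are hypothetical, and the scores are assumed to come from a sentiment analysis tool that rates each comment between -1 (negative) and 1 (positive).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (product, sentiment score between -1 and 1).
feedback = [
    ("router", 0.75),
    ("router", 0.25),
    ("headset", -0.5),
    ("headset", -0.25),
    ("webcam", 0.25),
]


def sentiment_by_product(records):
    """Group scores by product and return the average score for each."""
    grouped = defaultdict(list)
    for product, score in records:
        grouped[product].append(score)
    return {product: mean(scores) for product, scores in grouped.items()}


averages = sentiment_by_product(feedback)
# The product with the lowest average is the first candidate for a closer look.
weakest = min(averages, key=averages.get)
print(averages, weakest)
```

An overall average across all five comments would hide the fact that one product is dragging sentiment down while the others are doing well.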

Improving the Customer Experience for Greater Loyalty

If you find that your company isn't getting a lot of highly engaged customer advocates, then you may be running into problems generating loyalty. To get people to care more about your business, you need to fully understand your typical customers. Buyer personas are an excellent tool to keep on hand for this purpose. Use data from highly loyal customers to create profiles that reflect those characteristics. Spend some time discovering the motivations and needs that drive them during the purchase decision. When you fully put yourself in the customer's shoes, you can begin to identify ways to make them more emotionally engaged in their brand support. One way that many companies drive more loyalty is by personalizing customer experiences. You give them content, recommendations and other resources that are tailored to their lifestyle and needs.

Addressing Weak Spots in Customer Retention

Many factors lead to poor customer retention. Buyers may feel like the products were misrepresented during marketing or sales, they could have a hard time getting through to customer support, or they aren't getting the value that they expected. In some cases, you have a product mismatch, where the buyer's use case doesn't match what the item can accomplish. A poor fit leads to a bad experience.

Properly educating buyers on what they're getting and how to use it can lead to people who are willing to make another purchase from your company. You don't want to center your sales tactics on one-time purchases. Think of that first purchase as the beginning of a long-term relationship. You want to be helpful and support the customer so they succeed with your product lines. Sometimes that means directing them to a competitor if you can't meet their needs. This strategy might sound counterintuitive, but the customers remember that you went out of your way to help them, all the way up to sending them to another brand. They'll happily mention this good experience to their peers. If their needs change in the future, you could end up getting them back.

Customer loyalty and retention are the keys to a growing business. Make sure that you're getting all the information you need out of your feedback to find strategies to build these numbers up.

Text Analytics & AI

How to Interpret Survey Data: A Step-by-Step Guide for Better Insights

Interpreting survey data is a multi-step process that requires significant human, technological, and financial resources. But the investment pays off when teams can act on the findings — improving experiences, fixing problems, and ultimately delighting customers.

The type of survey or question used sets the direction for respondents and shapes the resulting data. Understanding the different types of survey questions, and the insights they offer, is critical to interpreting responses accurately.

Step One: Choose the Right Type of Survey

Before launching a survey, it's important to review its goal and the type of questions selected. This sets expectations for the kind of data that will be collected and the type of analysis required.

Here are some common types of survey questions:

- Net Promoter Score (NPS)

The Net Promoter Score measures how likely a customer is to recommend a brand to others. Respondents provide a rating, typically on a scale from 0–10, answering the question: "How likely are you to recommend [business or brand] to others?"

The resulting score provides insight into brand loyalty and word-of-mouth strength. Some NPS surveys include a follow-up open-ended question, allowing customers to explain the reasons behind their score. These verbatim responses offer deeper insight into strengths and areas for improvement.

- Post-Purchase Follow-Up

After a purchase, customers are asked how satisfied they are with the product, service, or overall buying experience. These surveys help companies identify and fix weak points in the customer journey — ideally before dissatisfaction leads to churn. Proactively addressing concerns also opens opportunities to turn a negative experience into a positive one.

- Customer Support Follow-Up

When customers contact a support team, follow-up surveys assess whether their problem was resolved. This not only measures the effectiveness of customer service but can also surface recurring issues in products or services that need attention.

- New Products and Features

Surveys conducted during product development — before launch — gather valuable input that can save time and resources.