The Voxco Answers Anything Blog

Read on for more in-depth content on the topics that matter and shape the world of research.

Text Analytics & AI

Customer Experience Analysis - How to improve customer loyalty and retention

The global marketplace forces businesses to compete with organizations from around the world. Standing out on price becomes difficult or impossible, so the customer experience has become vitally important. Customer loyalty and retention are tied to the way your buyers feel about your brand throughout their interactions. Customer experience analysis tools provide vital insight into how you can address problems and lead consumers to higher satisfaction levels. However, getting actionable information depends on knowing which type of tool to use and how to collect the data for it.

Problems With Relying Only on Surveys for Customer Satisfaction Metrics

One of the most common ways of collecting data about the customer experience is through surveys. You may be familiar with the Net Promoter Score (NPS) system, which gauges customer loyalty on a 0 to 10 rating scale. The survey used for this method is based on a single question: “How likely are you to recommend our business to others?” Other surveys have a broader scope, but both types focus on closed-ended questions. If the consumer has additional feedback on topics that the questions don't cover, you lose the opportunity to collect that data. Using open-ended questions and taking an in-depth look at what customers say in their answers gives you a deeper understanding of your strengths and weaknesses. Sometimes this can be as simple as putting a text comment box at the end. In other cases, you could have fill-in responses for each question.
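The NPS arithmetic itself is simple. As the score is commonly defined, respondents who answer 9 or 10 are promoters, 0 to 6 are detractors, and 7 or 8 are passives; the score is the percentage of promoters minus the percentage of detractors. Here is a minimal Python sketch, assuming the ratings arrive as a plain list of integers (the function name is illustrative):

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Return NPS (-100 to 100) for 0-10 likelihood-to-recommend ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 through 6
    return 100.0 * (promoters - detractors) / len(ratings)


sample = [10, 9, 9, 8, 7, 6, 3, 10]
print(net_promoter_score(sample))  # 4 promoters, 2 detractors of 8 -> 25.0
```

The single number is where the closed-ended view stops. It says how many people fall into each group, but only the open-ended comments explain why.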

How to Get Better Customer Feedback

To get the most out of your customer experience analysis tools, you need to start by establishing a plan to get quality feedback. Here are three categories to consider:

Direct

This input is given to your company by the customer. First-party data gives you an excellent look at what the consumers are feeling when they engage with your brand. You get this data from a number of collection methods, including survey results, studies and customer support histories.

Indirect

The customer is talking about your company, but they aren't saying it directly to you. You run into this type of feedback on social media, with buyers sharing information in groups or on their social media profiles. If you use social listening tools for sales prospecting or marketing opportunities, you can repurpose those solutions to find more feedback sources. Reviews on people's websites, social media profiles, and dedicated review websites are also important.

Inferred

You can make an educated guess about customer experiences through the data that you have available. Analytics tools can give you insight on what your customers do when they're engaging with your brand. Once you're collecting customer data from a variety of sources, you need a way to analyze it properly. A sentiment analysis tool looks through the customer information to tell you more about how they feel about the experience and your brand. While you can try to do this part of the process manually, it requires an extensive amount of human resources to accomplish, as well as a lot of time.
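To make the idea concrete, here is a deliberately tiny lexicon-based scorer in Python. It is only a sketch, not how any commercial sentiment engine works: the word lists are hypothetical placeholders, and real tools handle negation, intensity, and context far more carefully.

```python
import re

# Hypothetical, tiny word lists for illustration only.
POSITIVE = {"love", "great", "easy", "helpful", "fast"}
NEGATIVE = {"slow", "broken", "rude", "confusing", "expensive"}


def sentiment_score(comment: str) -> int:
    """Count positive words minus negative words in a comment."""
    words = re.findall(r"[a-z']+", comment.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def sentiment_label(comment: str) -> str:
    """Map the raw score to positive, negative, or neutral."""
    score = sentiment_score(comment)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment_label("Support was fast and helpful"))    # positive
print(sentiment_label("Checkout is slow and confusing"))  # negative
print(sentiment_label("I bought the blue model"))         # neutral
```

Even this toy version shows why automation matters: the same rules are applied identically to every comment, however many there are.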

Looking at Product-specific Customer Experience Analytics

One way to use this information to benefit customer loyalty and satisfaction is to analyze it on a product-specific basis. When your company has many offerings, looking only at the overall feedback makes it difficult to know how the individual product experiences are doing. A sentiment analysis tool that can sort the feedback into groups for each product makes it possible to look at the positive and negative factors influencing the customer experience and judge how to improve sentiment. Among other things, you may learn whether customers want to see new features or models of your products, whether they responded to promotions during the purchase process, and whether products need to be shelved or completely reworked.
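Once each comment carries a product tag and a sentiment score, sorting feedback by product is mostly a grouping step. A minimal sketch, assuming scores already come from some sentiment tool on a -1 to 1 scale (the records and product names below are made up):

```python
from collections import defaultdict
from statistics import mean

# Hypothetical feedback records: (product, sentiment score from -1 to 1).
feedback = [
    ("Blender X", 0.8), ("Blender X", 0.6), ("Blender X", -0.2),
    ("Toaster Z", -0.7), ("Toaster Z", -0.4), ("Toaster Z", 0.1),
]


def sentiment_by_product(records):
    """Return {product: (comment count, mean sentiment)}."""
    grouped = defaultdict(list)
    for product, score in records:
        grouped[product].append(score)
    return {p: (len(s), mean(s)) for p, s in grouped.items()}


summary = sentiment_by_product(feedback)
# List the weakest products first so they get attention first.
for product, (n, avg) in sorted(summary.items(), key=lambda kv: kv[1][1]):
    print(f"{product}: {n} comments, mean sentiment {avg:+.2f}")
```

In practice you would also keep the comments behind each average, since they explain what is driving a product's score up or down.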

Improving the Customer Experience for Greater Loyalty

If you find that your company isn't getting a lot of highly engaged customer advocates, then you may be running into problems generating loyalty. To get people to care more about your business, you need to fully understand your typical customers. Buyer personas are an excellent tool to keep on hand for this purpose. Use data from highly loyal customers to create profiles that reflect their characteristics. Spend some time discovering the motivations and needs that drive them during the purchase decision. When you fully put yourself in the customer's shoes, you can begin to identify ways to make them more emotionally engaged in their support for your brand. One way that many companies drive more loyalty is by personalizing customer experiences: you give customers content, recommendations and other resources that are tailored to their lifestyle and needs.

Addressing Weak Spots in Customer Retention

Many factors lead to poor customer retention. Buyers may feel like the products were misrepresented during marketing or sales, they could have a hard time getting through to customer support, or they aren't getting the value that they expected. In some cases, you have a product mismatch, where the buyer's use case doesn't match what the item can accomplish. A poor fit leads to a bad experience. Properly educating buyers on what they're getting and how to use it can lead to people who are willing to make another purchase from your company. You don't want to center your sales tactics on one-time purchases. Think of that first purchase as the beginning of a long-term relationship. You want to be helpful and support the customer so they succeed with your product lines. Sometimes that means directing them to a competitor if you can't meet their needs. This strategy might sound counterintuitive, but customers remember that you went out of your way to help them, even to the point of sending them to another brand. They'll happily mention this good experience to their peers. If their needs change in the future, you could end up getting them back. Customer loyalty and retention are the keys to a growing business. Make sure that you're getting all the information you need out of your feedback to find strategies to build these numbers up.

2/10/20


Text Analytics & AI

Machine Learning for Text Analysis

“Beware the Jabberwock, my son!

The jaws that bite, the claws that catch!

Beware the Jubjub bird, and shun

The frumious Bandersnatch!”

— Lewis Carroll

Verbatim coding seems a natural application for machine learning. After all, for important large projects and trackers we often have lots of properly coded verbatims. These seem perfect for machine learning. Just tell the computer to learn from these examples, and voilà! We have a machine learning model that can code new responses and mimic the human coding. Sadly, it is not that simple.

Machine Learning Basics

To understand why, you need to know just a bit about how machine learning works. When we give the computer a bunch of coded verbatims and ask it to learn how to code them, we are doing supervised machine learning. In other words, we are not just asking the computer to “figure it out”. We are showing it how proper coding is done for our codeframe and telling it to learn how to code similarly. The result of the machine learning process is a model containing the rules the computer has learned. Using the model, the computer can try to code new verbatims in a manner that mimics the examples from which it has learned.

There are many supervised machine learning tools out there, with different algorithms and features. Amazon Web Services offers several. There are even machine learning engines developed specifically for coding survey responses. One of these was offered by Ascribe for several years. The problem with these tools is that the machine learning model is opaque: you can’t look inside the model to understand why it makes its decisions.

Problems with Opaque Machine Learning Models

So why are opaque models bad? Let’s suppose you train up a nice machine learning model for a tracker study you have fielded for several waves. In comes the next wave, and you code it automatically with the model. It won’t get it perfect of course. You will have some verbatims where a code was applied when it should not have been. This is a False Positive, or an error of commission. You will also find verbatims where a code was not applied that should have been. This is a False Negative, or error of omission. How do we now correct the model so that it does not make these mistakes again?
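Counting these two error types for a code comes down to comparing the codes a human applied with the codes the model applied. A minimal sketch, assuming each verbatim's codes are stored as a set of code labels keyed by a verbatim ID, with the human coding treated as correct (the IDs and code labels are hypothetical):

```python
def error_counts(human, model, code):
    """Return (true positives, false positives, false negatives) for one code.

    `human` and `model` map a verbatim ID to the set of codes applied to it;
    the human coding is treated as correct.
    """
    tp = fp = fn = 0
    for vid, correct in human.items():
        predicted = model.get(vid, set())
        if code in predicted and code in correct:
            tp += 1
        elif code in predicted:
            fp += 1  # error of commission: applied but should not have been
        elif code in correct:
            fn += 1  # error of omission: should have been applied but was not
    return tp, fp, fn


# Hypothetical coding of one new wave.
human = {"r1": {"PRICE"}, "r2": {"PRICE", "SERVICE"}, "r3": {"SERVICE"}, "r4": set()}
model = {"r1": {"PRICE"}, "r2": {"SERVICE"}, "r3": {"SERVICE", "PRICE"}, "r4": {"PRICE"}}

print(error_counts(human, model, "PRICE"))    # (1, 2, 1)
print(error_counts(human, model, "SERVICE"))  # (2, 0, 0)
```

Counting the errors is the easy part. The hard part, as the next sections explain, is changing an opaque model so that they go away.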

Correcting False Negatives

Correcting false negatives is not too difficult, at least in theory. You just come up with more examples of where the code is correctly applied to a verbatim. You put these new examples in the training set, build the model again, and hopefully the number of these false negatives decreases. Hopefully. If not, you lather, rinse, and repeat.

Correcting False Positives

Correcting false positives can get maddening. For this you need more examples where the code was correctly not applied to a verbatim. But what examples? And how many? In practice the number of examples to correct false positives can be far larger than to correct false negatives.

The Frumious Downward Accuracy Spiral

One of the promises of machine learning is that over time you have more and more training examples, so you can retrain the model with these new examples and the model will continue to improve, right? Wrong. If you retrain with new examples produced by your machine learning model, the accuracy will go down, not up. Using examples that contain the model’s own mistakes reinforces those mistakes. You can avoid this by thoroughly checking the verbatims coded by the machine learning tool and retraining the model with these corrected examples. But who is going to do that? This error checking takes a good fraction of the time that would be required to simply code the verbatims manually, and the whole point of using machine learning is to avoid spending that time. So either the model gets worse over time, or you spend nearly as much labor on your training set as if you were not using the machine learning tool at all.


Explainable Machine Learning Models

It would be great if you could ask the machine learning model why it made the decision that led to a coding error. If you knew that, you would at least be in a better position to conjure up new training examples to fix the problem. It would be even better if you could simply correct that decision logic in the model without needing to retrain it or find new training examples.

This has led companies such as IBM to research explainable AI. The IBM AI Explainability 360 project is one example of this work, and other researchers are also pursuing the concept.

At Ascribe we are developing a machine learning tool that is both explainable and correctable. In other words, you can look inside the machine learning model to understand why it makes its decisions, and you can correct those decisions if needed.

Structure of an Explainable Machine Learning Model for Verbatim Coding

For verbatim coding, we need a machine learning model that contains a set of rules for each code within the codeframe. For a given verbatim, if a rule for a code matches the verbatim, then the code is applied to the verbatim. If the rules are written in a way that humans can understand, then we have a machine learning model that can be understood and if necessary corrected.

The approach we have taken at Ascribe is to create rules using regular expressions. To be precise, we use an extension of regular expressions. A simplistic approach would be to create a set of rules that attempt to match the verbatim directly. This can be reasonable, particularly for short verbatim responses. We can do better than that, however. A far more powerful approach is to first run the verbatims through natural language processing (NLP). This produces findings consisting of topics, expressions, extracts, and sentiment scores. Each verbatim comment can produce many findings, and we call the NLP elements of a finding its facets.

For our purposes, we can define the facets of a finding as:

- Verbatim: the original comment itself.

- Topic: what the person is talking about. Typically a noun or noun phrase.

- Expression: what the person is saying about the topic. Often a verb or adverbial phrase.

- Extract: the portion of the verbatim that yielded the topic and expression, with extraneous sections such as punctuation removed.

- Sentiment score: positive or negative if sentiment is expressed, or empty if there is no expression of sentiment.

Rules in the Machine Learning Model

Armed with the NLP findings for a verbatim, we can build regular expression rules to match the facets of each NLP finding. A rule has a regular expression for each facet. If the regular expression for a facet is empty, it always matches that facet. Each rule must have a regular expression for at least one facet. If a rule has regular expressions for several facets, they must all match the NLP finding for the rule to match.
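The matching semantics just described can be sketched in Python. This is an illustration under stated assumptions, not Ascribe's implementation: Python's standard `re` module stands in for Ascribe's extended regular expressions, patterns are searched case-insensitively anywhere in the facet text, and `Finding` and `Rule` are hypothetical names.

```python
import re
from dataclasses import dataclass

FACETS = ("verbatim", "topic", "expression", "extract", "sentiment")


@dataclass
class Finding:
    """One NLP finding; each attribute is one of its facets."""
    verbatim: str
    topic: str = ""
    expression: str = ""
    extract: str = ""
    sentiment: str = ""  # "positive", "negative", or "" when no sentiment is expressed


@dataclass
class Rule:
    """One regular expression per facet; an empty pattern always matches that facet."""
    verbatim: str = ""
    topic: str = ""
    expression: str = ""
    extract: str = ""
    sentiment: str = ""

    def __post_init__(self) -> None:
        if not any(getattr(self, facet) for facet in FACETS):
            raise ValueError("a rule needs a regular expression for at least one facet")

    def matches(self, finding: Finding) -> bool:
        """True only if every non-empty pattern matches its facet of the finding."""
        for facet in FACETS:
            pattern = getattr(self, facet)
            if pattern and not re.search(pattern, getattr(finding, facet), re.IGNORECASE):
                return False
        return True


finding = Finding(
    verbatim="I liked the product description",
    topic="product description",
    expression="liked",
    sentiment="positive",
)
print(Rule(topic="description", expression="like").matches(finding))  # True
print(Rule(topic="price").matches(finding))                            # False
```

Because each rule is just a handful of readable patterns, a person can inspect exactly why it did or did not fire.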

Let’s look at a specific example. Suppose we have a code in our codeframe:

- Liked advertisement / product description

Two appropriate rules using the Topic and Expression of the NLP findings would be:

The first rule matches a verbatim where the respondent likes the product description, and the second a verbatim where the respondent likes the ad or advertisement. The | character in the second rule means “OR” in a regular expression.
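As a hypothetical reconstruction based only on the description above, the two rules might be written as Topic and Expression patterns like these. The exact patterns, the whole-word matching, and the assumption that the NLP step reports expressions in base form (`like` rather than `liked`) are all illustrative choices, not Ascribe's actual rules.

```python
import re

# Hypothetical reconstruction of the two rules for the code
# "Liked advertisement / product description": (Topic pattern, Expression pattern).
RULES = [
    ("description", "like"),
    ("ad|advertisement", "like"),  # "|" means OR
]


def facet_matches(pattern: str, text: str) -> bool:
    # Assumption: whole-word matching, so "ad" matches "ad" but not "bad" or "made".
    return re.search(rf"\b(?:{pattern})\b", text, re.IGNORECASE) is not None


def code_applies(topic: str, expression: str) -> bool:
    """The code applies if any rule matches both the Topic and the Expression."""
    return any(
        facet_matches(t, topic) and facet_matches(e, expression) for t, e in RULES
    )


print(code_applies("product description", "like"))  # True
print(code_applies("ad", "like"))                   # True
print(code_applies("ad", "love"))                   # False: no rule mentions "love"
print(code_applies("bad packaging", "like"))        # False
```

Changing both Expression patterns from `like` to `like|love` makes the third call return `True`, which is exactly the kind of hand correction described in the text.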

The key point here is that we can look at the rules and understand exactly why they will code a specific verbatim. This is the explainable part of the model. Moreover, we can change the model “by hand”. It may be that in our set of training examples no respondent said that she loved the ad. Still, we might want to match such verbatims to this code. We can simply change the expressions to:

like|love

to match such verbatims. This is the correctable part of the model.

Applying the Machine Learning Model

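When we want to use our machine learning model to code a set of uncoded verbatims, the computer runs each verbatim through NLP to obtain its findings, then applies every code that has a rule matching at least one of those findings.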
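A coding pass like this can be sketched in a few lines, assuming the NLP findings for each verbatim have already been computed and each rule is stored as a mapping from facet name to pattern. The model contents, code labels, and data below are hypothetical.

```python
import re

# Hypothetical model: code label -> list of rules; a rule maps facet name -> regex.
MODEL = {
    "Liked advertisement / product description": [
        {"topic": r"\bdescription\b", "expression": r"\blike\b"},
        {"topic": r"\b(?:ad|advertisement)\b", "expression": r"\blike\b"},
    ],
    "Price too high": [
        {"topic": r"\bprice\b", "sentiment": r"^negative$"},
    ],
}


def rule_matches(rule: dict[str, str], finding: dict[str, str]) -> bool:
    """All patterns in the rule must match their facet of the finding."""
    return all(
        re.search(pattern, finding.get(facet, ""), re.IGNORECASE)
        for facet, pattern in rule.items()
    )


def code_verbatims(findings_by_id: dict[str, list[dict[str, str]]]) -> dict[str, set[str]]:
    """Apply every code whose rules match at least one finding of a verbatim."""
    return {
        vid: {
            code
            for code, rules in MODEL.items()
            if any(rule_matches(r, f) for r in rules for f in findings)
        }
        for vid, findings in findings_by_id.items()
    }


findings = {
    "r1": [{"topic": "ad", "expression": "like", "sentiment": "positive"}],
    "r2": [{"topic": "price", "expression": "too high", "sentiment": "negative"},
           {"topic": "product description", "expression": "like", "sentiment": "positive"}],
    "r3": [{"topic": "delivery", "expression": "late", "sentiment": "negative"}],
}
print(code_verbatims(findings))
```

Note that one verbatim can receive several codes (r2 above) or none at all (r3), just as with human coding.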

For newly created models you can be sure that it will not code all the verbatims correctly. You will want to check and correct the coding. Having done that, you should explore the model to tune it so that it does not make these mistakes again.

Exploring the Machine Learning Model

The beauty of an explainable machine learning model is that you can understand exactly how the model makes its decisions. Let’s suppose that our model has applied a code incorrectly. This is a False Positive. Assuming we have a reasonable design surface for the model, we can look at the verbatim and discover exactly which rule caused it to apply the code incorrectly. Once we know that, we can change the rule to prevent it from making that incorrect decision.
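Tracing a False Positive back to its rule can be sketched as a function that reports which rules fired on which findings. The rules and data are hypothetical, and the example assumes the NLP step keeps a negation such as "not like" inside the Expression facet.

```python
import re

CODE = "Liked advertisement / product description"

# Hypothetical rules for this code; each rule maps a facet name to a regex.
RULES = {
    CODE: [
        {"topic": r"\bdescription\b", "expression": r"\blike\b"},
        {"topic": r"\b(?:ad|advertisement)\b", "expression": r"\blike\b"},
    ],
}


def explain(code: str, findings: list[dict[str, str]]) -> list[tuple[int, dict[str, str]]]:
    """Return (rule index, finding) for every rule of `code` that matched a finding."""
    hits = []
    for index, rule in enumerate(RULES[code]):
        for finding in findings:
            if all(re.search(p, finding.get(facet, ""), re.IGNORECASE) for facet, p in rule.items()):
                hits.append((index, finding))
    return hits


# A false positive: the respondent did NOT like the ad, but "not like" contains "like".
wrongly_coded = [{"topic": "ad", "expression": "not like", "sentiment": "negative"}]
print(explain(CODE, wrongly_coded))  # rule 1 fired

# One possible correction: make rule 1 also require positive sentiment.
RULES[CODE][1]["sentiment"] = r"^positive$"
print(explain(CODE, wrongly_coded))  # [] (the rule no longer fires)
```

Other fixes are possible, such as anchoring the expression pattern. The point is that the culprit rule is visible and can be edited directly.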

The same is true when the model does not apply a code that it should, a False Negative. In this case we can look at the rules for the code and at the NLP findings for the verbatim, and add a new rule that matches the verbatim. Again, a good design surface is needed here. We want to verify that our rule correctly matches the desired verbatim, but we also want to check whether the new rule introduces any False Positives in other verbatims.
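Both checks can be sketched together: confirm that a candidate rule codes the missed verbatim, then list other already-coded verbatims it would wrongly code. The human coding is treated as correct, and all names and data are hypothetical.

```python
import re

CODE = "Liked advertisement / product description"


def rule_matches(rule: dict[str, str], findings: list[dict[str, str]]) -> bool:
    """True if some finding matches every facet pattern in the rule."""
    return any(
        all(re.search(p, f.get(facet, ""), re.IGNORECASE) for facet, p in rule.items())
        for f in findings
    )


def check_new_rule(rule, target_id, findings_by_id, human_codes, code):
    """Return (matches target?, other verbatims the rule would wrongly code)."""
    if not rule_matches(rule, findings_by_id[target_id]):
        return False, []
    new_false_positives = [
        vid
        for vid, findings in findings_by_id.items()
        if vid != target_id
        and rule_matches(rule, findings)
        and code not in human_codes.get(vid, set())
    ]
    return True, new_false_positives


# Hypothetical data: r5 is the missed verbatim ("I loved the commercial").
findings_by_id = {
    "r5": [{"topic": "commercial", "expression": "love"}],
    "r6": [{"topic": "commercial break", "expression": "annoying"}],
    "r7": [{"topic": "commercial flights", "expression": "love"}],
}
human_codes = {"r5": {CODE}, "r6": set(), "r7": set()}

candidate = {"topic": r"\bcommercial\b", "expression": r"\b(?:like|love)\b"}
print(check_new_rule(candidate, "r5", findings_by_id, human_codes, CODE))
# (True, ['r7']): the rule fixes r5 but would wrongly code r7
```

Tightening the Topic pattern to `^commercial$` would still fix r5 without touching r7. This is the kind of trade-off a good design surface should make easy to see.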

Upward Accuracy Spiral

If you are following closely you can see that the model could be constructed without the aid of the computer. A human could create and review all of the rules by hand. We must emphasize that in our approach the model is created by the computer completely automatically.

With a traditional opaque machine learning model, the only tool you have to improve the model is to improve the training set. With the approach we have outlined here, that technique remains valid. But in addition, you can understand and correct problems in the model without curating a new training set. How you elect to blend these approaches is up to you, but unless you spend the time to carefully quality-check the training set, manual correction of the model is preferred. This avoids the frumious downward accuracy spiral.

With a new model you should inspect and correct the coding each time the model is used to code a new set of verbatims, then explore the model and tune it to avoid these mistakes. After a few such cycles you may pronounce the model to have acceptable accuracy and forgo this correction and tuning cycle.

Mixing in Traditional Machine Learning

The explainable machine learning model we have described has the tremendous advantage of putting us in full control of the model. We can tell exactly why it makes its decisions and correct it when needed. Unfortunately, this comes at a price relative to traditional machine learning.

Traditional machine learning models do not follow a rigid set of rules like those we have described above. They use a probabilistic approach. Given a verbatim to code, a traditional machine learning model can make the pronouncement: “Code 34 should be applied to this verbatim with 93% confidence.” Another way to look at this is that the model says: “based on the training examples I was given my statistical model predicts Code 34 with 93% probability.”
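For contrast, here is a minimal multinomial naive Bayes classifier for a single code that returns a probability rather than a yes/no rule match. It is written from scratch to stay self-contained. Naive Bayes is one common textbook technique, chosen here for illustration, and is not necessarily what any particular coding product uses. The class name and training comments are made up.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesCode:
    """Multinomial naive Bayes for one code: does it apply to a verbatim?"""

    def fit(self, texts: list[str], labels: list[bool]) -> "NaiveBayesCode":
        # Both labels must be present, otherwise a class prior would be zero.
        self.doc_counts = Counter(labels)
        if self.doc_counts[True] == 0 or self.doc_counts[False] == 0:
            raise ValueError("need both coded and uncoded examples")
        self.word_counts = {True: Counter(), False: Counter()}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        return self

    def _log_score(self, words: list[str], label: bool) -> float:
        counts = self.word_counts[label]
        total = sum(counts.values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for w in words:
            if w in self.vocab:  # ignore words never seen in training
                # Add-one (Laplace) smoothing so a word missing from one class
                # does not drive that class's probability to zero.
                score += math.log((counts[w] + 1) / (total + len(self.vocab)))
        return score

    def probability(self, text: str) -> float:
        """Estimated probability that the code applies to `text`."""
        words = tokenize(text)
        diff = self._log_score(words, True) - self._log_score(words, False)
        # Numerically stable logistic function of the log-odds.
        if diff >= 0:
            return 1.0 / (1.0 + math.exp(-diff))
        z = math.exp(diff)
        return z / (1.0 + z)


texts = [
    "I liked the ad", "loved the advertisement", "great product description",
    "liked the description a lot", "delivery was late", "price is too high",
    "the box was damaged", "support never called back",
]
labels = [True] * 4 + [False] * 4
model = NaiveBayesCode().fit(texts, labels)
print(f"{model.probability('I really liked the ad'):.0%}")        # 96%
print(f"{model.probability('the delivery was late again'):.0%}")  # 14%
```

The model never states a rule. Its "reasoning" is spread across word counts, which is why its output is a confidence rather than an explanation.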

The big advantage to this technique is that this probabilistic approach has a better chance of matching verbatims that are similar to the training examples. In practice, the traditional machine learning approach can increase the number of true positives but will also increase the number of false positives. To incorporate traditional machine learning into our approach we need a way to screen out these false positives.

At Ascribe, we are taking the approach of allowing the user to specify that a traditional model may be used in conjunction with the explainable model. Each of the rules in the explainable model can be optionally marked to use the predictions from the traditional model. Such rules apply codes based on the traditional model but only if the other criteria in the rule are satisfied.

Returning to the code:

- Liked advertisement / product description

We could write a rule that says: “Use the traditional machine learning model to predict this code, but accept the prediction only if the verbatim contains one of the words ‘ad’, ‘advertisement’, or ‘description’.” In this way we gain control over the false positives from the traditional machine learning model. We keep the advantages of the opaque traditional model but remain in control.
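A gated rule like this can be sketched as a small function that accepts the traditional model's prediction only when a keyword condition holds. The probability input, the 0.5 threshold, and the function name are illustrative assumptions; any model that outputs a probability could feed it.

```python
import re

# Condition from the example rule: the verbatim must mention one of these words.
KEYWORDS = re.compile(r"\b(?:ad|advertisement|description)\b", re.IGNORECASE)


def gated_prediction(verbatim: str, model_probability: float, threshold: float = 0.5) -> bool:
    """Apply the code only if the traditional model predicts it AND the keyword check passes."""
    return model_probability >= threshold and KEYWORDS.search(verbatim) is not None


print(gated_prediction("Loved the new ad campaign", 0.93))   # True
print(gated_prediction("Loved the new store layout", 0.93))  # False: screened out
print(gated_prediction("The ad was fine", 0.20))             # False: model says no
```

The second call is the case the gate exists for: a confident prediction that the explainable condition rejects.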

Machine Learning for Verbatim Coding

Using machine learning to decrease manual labor in verbatim coding has been a tantalizing goal for years. The industry has seen several attempts end with marginal labor improvements coupled with decreased accuracy.

Ascribe Coder is today the benchmark for labor savings, providing several semi-automated tools for labor reduction. We believe the techniques described above will provide a breakthrough improvement in coding efficiency at accepted accuracy standards.

A machine learning tool that really delivers on the promise of speed and accuracy has many benefits for the industry. Decreased labor costs are certainly one advantage. Beyond this, project turnaround time can be dramatically reduced. We believe that market researchers will be able to offer the advantages of open-end questions in surveys where cost or speed had precluded them. Empowering the industry to produce quality research faster and cheaper has been our vision at Ascribe for two decades. We look forward to this next advance in using open-end questions for high-quality, cost-effective research.

1/30/20


Text Analytics & AI

Interpreting Survey Results

Interpreting survey data is an important multi-step process requiring significant human, technology, and financial resources. This investment pays off when teams are able to change direction, delight customers, and fix problems based on the information gained from the results.

The type of survey or question sets the direction for respondents and for the resulting survey data. Understanding the options and the perspectives they can reveal is critical to accurately interpreting the responses.

Step One: Choose the Type of Survey Questions

Before beginning, review the survey’s goal and the type of survey questions selected. This will help when interpreting the results and determining next steps. The following are frequently used survey types, though many more options exist.

The Net Promoter Score (NPS)

NPS is a widely used survey question that provides insight into how happy customers are with a brand. Respondents answer the question “How likely are you to recommend [business or brand] to others in your social circle?” on a 0 to 10 scale, and their answers are summarized as a single score. The NPS therefore reports how strong a brand’s word-of-mouth message is at a given time.

Some NPS questions follow up with an open-ended comment box. This allows customers to explain or identify the factors that influenced their choice. These verbatim NPS comments can help organizations home in on where their products or services are exceeding expectations and where things may be breaking down.
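Pairing each rating with its comment makes it easy to read detractor comments separately from promoter comments. A minimal sketch using the standard NPS groupings (0 to 6 detractors, 7 and 8 passives, 9 and 10 promoters); the responses are invented for illustration:

```python
from collections import defaultdict


def nps_group(rating: int) -> str:
    """Standard NPS grouping of a 0-10 rating."""
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


# Hypothetical (rating, verbatim comment) pairs.
responses = [
    (10, "Staff went out of their way to help"),
    (4, "Installation took three visits"),
    (8, "Good product, a bit pricey"),
    (2, "Nobody returned my calls"),
]

comments_by_group = defaultdict(list)
for rating, comment in responses:
    comments_by_group[nps_group(rating)].append(comment)

for group in ("detractor", "passive", "promoter"):
    print(group, comments_by_group[group])
```

Reading the detractor comments as a group is often the fastest way to see where things may be breaking down.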

Post-Purchase Follow-Up

Asking customers about how satisfied they are with the purchasing process and/or with products or services after they make a purchase can be helpful for businesses seeking to improve their customer journey. Questions include how satisfied the customer is with the experience, how happy they are with their purchase, and whether they’ve had any challenges getting the most out of their purchase.

This type of survey helps companies prevent customer unhappiness by improving the path to purchase, identifying weaknesses in the product or service that can be addressed, and asking questions to open up a conversation with unhappy customers early. Proactive customer support can transform a customer’s negative experience into a positive one and create a strong advocate in that customer.

Customer Support Contact Follow-Up

When customers contact a support resource, this kind of survey asks whether the customer’s problem has been fixed. It shows the ability of customer support to solve problems and identifies when the problem isn’t user error but inherent issues within the product, service or customer experience. Knowing this answer helps organizations address the right issue at the right time so their customers remain happy even after they purchase and begin using a product or service.

New Products and Features

A product may perform perfectly within the controlled environment of a lab or development area, only to flounder when tested in a real environment. Inviting customer feedback during the planning stages is important because it can save a company significant time and money as they bring a new product, service, or feature to the market. In addition, businesses satisfy their customers better when they develop the updates and features that their customers want. Asking for input from customers allows companies to see which updates are most important and therefore belong higher on the priority list.

Step Two: Organize the Survey Data and Results

After choosing the type of survey, it’s time to deploy it and review the results. Depending on the survey chosen, organizations may receive only numerical data (such as a Net Promoter Score), numerical and comment data, or comment (textual) data only.

When the results come in, organizing them first makes further analysis easier. Questions to consider when organizing data include:

- Did the survey target a particular product or customer segment?

- Did the survey go to people making an initial purchase or to repeat customers (who are already at least somewhat loyal to the business)?

- What are the unique characteristics that separate data sets from one another? Tag the feedback based on these criteria in order to compare and contrast the results of multiple surveys.

- What overall themes appear in the survey data? Is there a commonality amongst respondents? Do a majority of the responses focus on a particular feature or customer service issue? A significant number of references to the same topic indicates something that is particularly positive or negative.

- Is the emotion in the verbatim comments positive or negative? This is sentiment analysis of text responses and gives context to numerical rankings. Sentiment analysis results can show where businesses need to focus improvement effort.
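Tagging and theme counting can be as simple as grouping responses by their tags and counting topics and sentiment within each group. A minimal sketch, assuming each response has already been tagged with a segment, a list of topics, and a sentiment label (by a person or by a text analytics tool); the field names and data are hypothetical:

```python
from collections import Counter, defaultdict

# Hypothetical, already-tagged responses.
responses = [
    {"segment": "first purchase", "topics": ["checkout", "shipping"], "sentiment": "negative"},
    {"segment": "first purchase", "topics": ["shipping"], "sentiment": "negative"},
    {"segment": "repeat customer", "topics": ["support"], "sentiment": "positive"},
    {"segment": "repeat customer", "topics": ["shipping", "support"], "sentiment": "positive"},
]

topics_by_segment = defaultdict(Counter)
sentiment_by_segment = defaultdict(Counter)
for r in responses:
    topics_by_segment[r["segment"]].update(r["topics"])
    sentiment_by_segment[r["segment"]][r["sentiment"]] += 1

for segment in topics_by_segment:
    print(segment, topics_by_segment[segment].most_common(2), dict(sentiment_by_segment[segment]))
```

Keeping the segment tag on every response is what makes it possible to compare first-time buyers with repeat customers later.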

Step Three: Analyze and Interpret the Data

Once the data is organized, the next step is to analyze it and look for insights. Until recently, data analysis required manual processing using spreadsheets or similar applications. Not only is manual analysis tedious and time-consuming (taking weeks or months with large data sets), it is also inconsistent.

Manual analysis requires human coders to review all of the data. No two people will review information the same way, and the same person may assess it differently from one day to the next. Many factors can change how an individual assesses a set of data, from their physical wellbeing (Hungry? Tired? Coming down with a cold?) to their emotional state. This inconsistency makes it challenging to detect trends and significant changes over time, which businesses need in order to determine whether improvement efforts are working.

Text Analysis Tools

Text analysis tools automate many tasks associated with reviewing survey data, including recognizing themes and topics, determining whether a response indicates positive or negative emotion (sentiment), and identifying categories for this information.

Today, companies like Ascribe have developed robust and extremely efficient survey analysis tools that automate the process of reviewing text. Ascribe’s software solutions include sentiment analysis of verbatim comments, which offers greater visibility into customer feedback in minutes, not weeks. Advanced text analytics solutions such as Ascribe's CX Inspector with X-Score can deliver high-level topic analysis or dig deep into sentiment using accelerated workflows that deliver actionable insights sooner.

CX Inspector can analyze and synthesize data from multiple channels in addition to surveys, such as social media, customer panels, and customer support notes. This creates a multidimensional big picture of an organization’s relationship with its customers, patients, employees, etc. These tools also drill down into the information through topics and categories. For companies with international products and services, Ascribe’s text analysis and sentiment analysis tools can also perform multi-lingual analysis.

X-Score is a patented approach to customer measurement that provides a customer satisfaction score derived from people’s authentic, open-ended comments about their experience. X-Score highlights key topics driving satisfaction and dissatisfaction, helping identify the actions needed to improve customer satisfaction quickly and easily. In this way, companies can cut the number of questions asked in half and reduce the size of the data set without compromising on the quality of data they receive.

How Automated Text Analysis Tools Work

Ascribe’s cutting-edge suite of solutions works by combining Natural Language Processing (NLP) and Artificial Intelligence (AI). Using machine learning, the software can sift through slang, regional dialects, colloquialisms, and other non-standard writing to interpret meaning and recognize the emotions behind the words, all without human intervention.

CX Inspector improves data quality by removing gibberish and profanity, and it can also improve data security by removing personally identifiable information.

What to Do with Survey Results

The reports provided by CX Inspector appear in easy-to-read charts and graphs. Visuals highlight the challenges that respondents face with an organization’s products or services. They also draw attention to high-functioning areas receiving consistently positive feedback. These reports can reveal repeated topics, trends, and questions, eliminating guesswork and allowing companies to resolve specific complaints about a product or service that come directly from respondents. Positive feedback highlights strengths and helps businesses capitalize on them (and avoid making changes to things customers are happy with).

Neutral sentiment can also be an action item, as it identifies customers who are either apathetic or ambivalent about their experiences with a brand. This category of customer may respond well to extra attention, or they may simply not be the ideal customer. Knowing which is true can keep a business from wasting effort on customers it cannot please and help it stay focused on the customers it can.

People like to feel heard and to know that their opinions are valued by the companies and brands they do business with. Surveys and reviews give them the opportunity to give feedback. But surveys by themselves do not satisfy customers, patients, and employees. The highest value of these surveys comes from interpreting the data correctly, learning from it, and using it to improve experiences, loyalty, and retention.

9/25/19


Text Analytics & AI

Using Sentiment Analysis to Improve Your Organization’s Satisfaction Score

Whether it’s a rating, ranking, score, review, or number of stars/tomatoes/thumbs up, consumers have ample opportunities to report their experiences with businesses, service providers, and organizations in the form of survey responses and other user-generated content. The challenge for organizations remains processing this high volume of data, finding positive and negative sentiment, identifying opportunities for improvement, and tracking the success of improvement efforts.

What Sentiment Scores Can’t Tell You

Numbers without context cannot inform real improvements. If an organization receives a sentiment score of 72, the leadership team may recognize whether the score is good or bad but have no information about what is influencing it either way. Worse still, a simple number does not reveal what to do to raise it.

A rational approach to improving is to look at a sentiment score and ask, “What is contributing to that number? What’s keeping us from earning a higher one?”

Analyzing the user-generated content and text-based survey results that accompany numbers, scores, and ratings provides that context and helps guide efforts toward the most significant changes to make. However, manual analysis of text is extremely time-consuming and nearly impossible to do consistently. Without a systematic and efficient process in place, organizations will remain largely in the dark about how to improve their constituents’ experiences.

When Ascribe launched the automated text analytics programs CX Snapshot and CX Inspector with X-Score™, these tools decreased the time it takes to review comments from weeks to hours. These programs perform text sentiment analysis using natural language processing (NLP) and report findings using visuals (charts and diagrams) to highlight trends, trouble spots, and strengths.

X-Score is a patented approach to customer measurement that provides a customer satisfaction score derived from people’s authentic, open-ended comments about their experience. X-Score highlights key topics driving satisfaction and dissatisfaction, helping identify the actions needed to improve customer satisfaction quickly and easily. In this way, companies can cut the number of questions asked in half and reduce the size of the data set without compromising on the quality of data they receive.

Consumer Glass Company Gains Sentiment Data within Hours with Automated Text Analysis

A major consumer glass company serves more than 4 million customers each year across the United States. They received 500,000 survey responses, which produced massive data sets to review. They needed to interpret the verbatim comments and connect them with other data, like Net Promoter Scores® (NPS). This would enable them to understand exactly how to delight every customer every time.

The company tried standard text analytics software, but its results were inconsistent. The system took days or weeks to model the data and could not connect the sentiment analysis with their NPS data.

Within a week of obtaining Ascribe’s natural language processing tool CX Inspector with X-Score, the company was getting exactly the kind of analysis it needed. CX Inspector now reviews customer feedback comments from surveys, social media, and call center transcriptions, and the company has a strategic way to use the data and trust it to inform sound business decisions. Their customer and quality analytics manager says,

“NPS is a score, and you don’t know what’s driving that score. But when you can see how it aligns with sentiment, then that informs you as to what might be moving that NPS needle. In every instance where I compared CX Inspector output with NPS, it was spot-on directionally, so it gives us a lot of confidence in it – and that also helps to validate the NPS.

“Without a text analytical method like CX Inspector, it is extremely difficult to analyze this amount of data. Ascribe provides a way to apply a consistent approach. This really allows us to add a customer listening perspective to our decision making.”
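What “seeing how NPS aligns with sentiment” might mean in practice can be sketched in a few lines. This is a minimal illustration, not how CX Inspector works: it assumes each response carries a 0 to 10 likelihood-to-recommend rating and a comment sentiment score on a hypothetical -2 to +2 scale, and it uses the standard NPS definition (percentage of promoters rating 9 or 10 minus percentage of detractors rating 0 to 6). The function names and data are invented for illustration.

```javascript
// Minimal sketch: compute NPS and the average comment sentiment within each
// NPS category. The response shape { rating, sentiment } is hypothetical.

// Standard NPS categories: 9-10 promoter, 7-8 passive, 0-6 detractor.
function npsCategory(rating) {
  if (rating >= 9) return "promoter";
  if (rating >= 7) return "passive";
  return "detractor";
}

// NPS = % promoters - % detractors, ranging from -100 to +100.
function netPromoterScore(responses) {
  let promoters = 0;
  let detractors = 0;
  for (const r of responses) {
    const category = npsCategory(r.rating);
    if (category === "promoter") promoters++;
    else if (category === "detractor") detractors++;
  }
  return (100 * (promoters - detractors)) / responses.length;
}

// Average sentiment per NPS category, to check that both measures point
// in the same direction.
function sentimentByCategory(responses) {
  const totals = {};
  for (const r of responses) {
    const category = npsCategory(r.rating);
    totals[category] = totals[category] || { sum: 0, count: 0 };
    totals[category].sum += r.sentiment;
    totals[category].count++;
  }
  const averages = {};
  for (const [category, t] of Object.entries(totals)) {
    averages[category] = t.sum / t.count;
  }
  return averages;
}

// Illustrative data only.
const responses = [
  { rating: 10, sentiment: 2 },
  { rating: 9, sentiment: 1 },
  { rating: 8, sentiment: 0 },
  { rating: 3, sentiment: -2 },
];
console.log(netPromoterScore(responses)); // 25
console.log(sentimentByCategory(responses));
```

If promoters’ comments average clearly positive and detractors’ clearly negative, the sentiment analysis and the NPS are telling a consistent story.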

How Sentiment Analysis Informs Your Organization’s Improvement Efforts

Reviewing the verbatim comments of individuals who have experienced a product or service provides essential data for teams looking for trouble spots, trends, and successes. This analysis of text reveals specific areas of service that are suffering even if other areas are doing well.

For example, text sentiment analysis can show that customers are consistently satisfied with nine of the ten interactions they have with an organization, while highlighting the tenth interaction that is consistently unsatisfactory.

Having this detail empowers organizations to focus their efforts on the handful of areas contributing most to the unhappiness of the people involved (whether they’re employees, customers, patients, etc.). By implementing changes and then comparing current scores and sentiment against previous ones, organizations can also measure their progress.

Regional Bank Experiences 60% Decrease in Processing Time

Recently a regional bank approached Ascribe in search of a more efficient and effective tool for finding positive and negative sentiment in customer feedback and analyzing it for specific changes that would enhance their customers’ experiences. They had been spending thousands of man-hours reading, categorizing, and analyzing comments. Yet they still struggled to identify the most important themes and sentiment, and the manual process was inconsistent and unable to show trends over time.

In minutes, Ascribe’s CX Snapshot processed a year’s worth of customer feedback gathered from all channels. It identified trends the team had been unable to unlock using their manual processes, and it quickly identified steps to take to improve the customer experience. The repeatable methodology ensured consistent analysis from month to month, making it possible to track trends over time.

A senior data scientist on the bank’s staff says,

“This new approach is repeatable, powerful, and it expedites our ability to act on the voice of the customer.”

How Asking Better Questions Improves Insights

Another way to improve the efficiency and effectiveness of surveys is to ask better questions, which reduces the number of questions needed. Better questions capture better data because the consumer is not being forced to respond to leading questions. For example, asking, “What did you not like?” could lead consumers to provide a negative answer even though their overall experience was very positive. “Tell us about your experience” is more neutral and allows consumers to give either a positive or negative response.

Asking fewer and better questions also reduces interview fatigue, thereby increasing the number of completed surveys and improving the survey-taking experience. It recognizes that people who choose to fill out a questionnaire usually have something very specific to share. Rather than making people work to fit their comments into question responses, “Tell us about your experience” invites consumers to immediately provide their feedback.

Ascribe’s text analytics tools help researchers in both of these areas. Often, all the feedback needed can come from this single open-ended question: “Tell us how we’re doing.”

Recommendations

Sentiment analysis tools are an essential component of an organization’s improvement efforts. A robust and strategic approach will automate surveying and the processing of user-generated content like reviews, social media posts, and other verbatim comments. Using machine learning, natural language processing, and systems that visualize the positive and negative themes that emerge can empower organizations to make decisions based on data-driven insights. Today, this kind of automated analysis can review massive data sets within hours and inform organizations of the specific steps to take to improve the satisfaction of their constituents. Ascribe’s suite of software solutions does just this, simplifying and shortening analysis time so organizations can focus on what needs to be improved, and then see better results faster.

9/16/19


Verbatim Analysis Software - Easily Analyze Open-Ended Questions

Do you want to stay close to your customers? Of course you do!

Improving the customer experience is central to any business. More broadly, any organization needs to stay in touch with its constituents, be they customers, employees, clients, or voters.

In today’s world, getting your hands on the voice of the customer isn’t difficult. Most companies have more comments from their customers than they know what to do with. These can come from internal sources, such as:

- E-mails to your support desk.

- Transcriptions from your call center.

- Messages to your customer service staff.

- Notes in your CRM system.

- Comments from website visitors.

For most companies, the problem isn’t lack of feedback. It’s the sheer volume of it. You might have thousands or even hundreds of thousands of new comments from your customers.

The question is, “How do you make sense of it?”

Traditional Survey Research

Traditional survey market research gives us some clues. Market research firms have been using surveys for years to guide their clients to improve customer satisfaction.

They have developed very powerful techniques to analyze comments from customers and turn them into actionable insights. Let’s take a look at how they do it, and see whether we can apply the same ideas to your voice of the customer data to find new insights that improve your company.

How Survey Market Researchers Use Verbatim Analysis Software To Deal With Open-Ends

A survey has two fundamental question types: closed-end and open-end. A closed-end question is one where you know the possible set of answers in advance, such as your gender or the state you live in.

When a respondent provides a free-form text response to a question, it is an open-end: you don’t know just what the respondent is going to say. That’s the beauty of open-ends. They let your customers tell you new things you didn’t know to ask.

Survey researchers call the open-end responses verbatims or text responses. To analyze the responses, the researcher has them coded. Humans trained in verbatim coding read the responses and invent a set of tags, or codes, that capture the important concepts from the verbatims.

In a survey about a new juice beverage the codes might look something like:

- Likes

  - Likes flavor

  - Likes color

  - Likes package

- Dislikes

  - Too sweet

  - Bad color

  - Hard to open

Human coders read each verbatim and tag it with the appropriate codes. When verbatim coding is completed, we can use the data to pose questions like:

- What percentage of the respondents like the flavor?

- What is the proportion of overall likes to dislikes?

And if you know other things about the respondents, such as their gender, you can ask:

- Do a greater percentage of men or women like the flavor?

The researcher would say that you have turned the qualitative information (verbatims) into quantitative information (codes).
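To make that step from codes to numbers concrete, here is a minimal sketch in plain JavaScript. It assumes each respondent record holds the codes a human coder assigned plus one closed-end answer (gender); the record shape, function names, and data are invented for illustration and are not how any particular coding tool stores results.

```javascript
// Minimal sketch: turn coded verbatims into numbers. Each record holds the
// codes a coder assigned plus one closed-end answer. Data is made up.
const respondents = [
  { gender: "F", codes: ["Likes", "Likes flavor"] },
  { gender: "F", codes: ["Likes", "Likes flavor", "Likes color"] },
  { gender: "F", codes: ["Likes", "Likes flavor", "Likes package"] },
  { gender: "F", codes: ["Dislikes", "Too sweet"] },
  { gender: "M", codes: ["Likes", "Likes flavor"] },
  { gender: "M", codes: ["Dislikes", "Too sweet"] },
  { gender: "M", codes: ["Dislikes", "Hard to open"] },
  { gender: "M", codes: ["Likes", "Likes package"] },
];

// Percentage of respondents (optionally only those passing a filter)
// tagged with the given code.
function percentWithCode(records, code, filter = () => true) {
  const subset = records.filter(filter);
  if (subset.length === 0) return 0;
  const tagged = subset.filter((r) => r.codes.includes(code)).length;
  return (100 * tagged) / subset.length;
}

// Number of respondents tagged with a code.
function countWithCode(records, code) {
  return records.filter((r) => r.codes.includes(code)).length;
}

console.log(percentWithCode(respondents, "Likes flavor")); // 50
console.log(countWithCode(respondents, "Likes"), countWithCode(respondents, "Dislikes")); // 5 3
console.log(percentWithCode(respondents, "Likes flavor", (r) => r.gender === "F")); // 75
console.log(percentWithCode(respondents, "Likes flavor", (r) => r.gender === "M")); // 25
```

Each question in the list above becomes a simple count or percentage once every verbatim carries its codes.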

How Can Verbatim Coding Work for You?

The technique of verbatim coding is used to analyze comments from customers, but it has its drawbacks. Verbatim coding is expensive and time consuming. It’s most appropriate when you have high-quality data and want the best possible analysis accuracy.

In that circumstance, you can either:

- Have the verbatims coded by a company specializing in the technique, or

- License software so that you can code the verbatims yourself.

Verbatim analysis software, like Ascribe Coder, is used by leading market research companies around the globe to code verbatims. This type of software increases productivity dramatically when compared to using more manual approaches to coding – like working in Excel.

Returning to our pile of customer comments, it would be great to get the benefits of verbatim coding without the cost, using the computer to cut cost and turnaround time with less involvement from human coders.

That’s where text analytics comes in.

Using Text Analytics To Analyze Open Ends

Working with customer comments, text analytics can tell us several things:

- What are my customers talking about? (These are topics.)

- What customer feedback am I getting about each topic? (These are expressions about topics.)

- How do they feel about these things? (These are sentiment ratings.)

If you were running a gym, you might get this comment:

- I really like the workout equipment, but the showers are dirty.

Text analysis identifies the topics, expressions and sentiment in the comment:

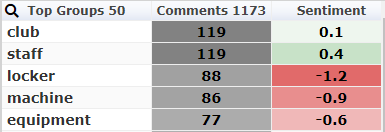

If we run a few thousand comments about our gym through text analytics, we might get results like this:

These are the things your members are talking about most frequently.

Each topic has an associated frequency (the number of times the topic came up) and a sentiment rating.

The sentiment rating for this analysis ranges from -2 (strongly negative) to +2 (strongly positive). The sentiment score shown for each topic is the average rating across all of its mentions.
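A text analytics tool does this aggregation for you, but the arithmetic behind a topic summary is simple. Here is a minimal JavaScript sketch, assuming each finding has already been reduced to a topic and a sentiment rating on the -2 to +2 scale; the field names and sample findings are invented for illustration.

```javascript
// Minimal sketch: aggregate text analytics findings by topic. Each finding
// is assumed to be { topic, sentiment } with sentiment from -2 to +2.
function summarizeByTopic(findings) {
  const byTopic = new Map();
  for (const { topic, sentiment } of findings) {
    const stats = byTopic.get(topic) || { frequency: 0, total: 0 };
    stats.frequency += 1;
    stats.total += sentiment;
    byTopic.set(topic, stats);
  }
  // Frequency and average sentiment per topic, most-mentioned first.
  return [...byTopic.entries()]
    .map(([topic, s]) => ({ topic, frequency: s.frequency, sentiment: s.total / s.frequency }))
    .sort((a, b) => b.frequency - a.frequency);
}

// Findings from a few made-up gym comments.
const findings = [
  { topic: "workout equipment", sentiment: 1 },
  { topic: "showers", sentiment: -1 },
  { topic: "showers", sentiment: -2 },
  { topic: "staff", sentiment: 2 },
  { topic: "showers", sentiment: 0 },
  { topic: "staff", sentiment: 1 },
];
console.log(summarizeByTopic(findings));
// showers: 3 mentions, average -1; staff: 2, average 1.5;
// workout equipment: 1, average 1
```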

Data Analysis: Drilling Into the Data

A good text analytics tool presents much more information than the simple table above. It will let you drill into the data, so you can see exactly what your customers are saying about the topics.

It should also let you use other information about your customers. For example, if you know the gender of each member, you can look at how the women’s opinions of the showers differ from the men’s.
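Drilling into one topic by a respondent attribute is a filter followed by a group-by. The sketch below is plain JavaScript and assumes, hypothetically, that each finding carries the member’s gender alongside its topic and sentiment; the data is invented.

```javascript
// Minimal sketch: average sentiment for one topic, split by an attribute.
// The finding shape { topic, sentiment, gender } is hypothetical.
function averageSentimentBy(findings, topic, attribute) {
  const groups = {};
  for (const f of findings) {
    if (f.topic !== topic) continue;
    const key = f[attribute];
    groups[key] = groups[key] || { total: 0, count: 0 };
    groups[key].total += f.sentiment;
    groups[key].count += 1;
  }
  const result = {};
  for (const [key, g] of Object.entries(groups)) result[key] = g.total / g.count;
  return result;
}

const findings = [
  { topic: "showers", sentiment: -2, gender: "F" },
  { topic: "showers", sentiment: -1, gender: "F" },
  { topic: "showers", sentiment: 1, gender: "M" },
  { topic: "staff", sentiment: 2, gender: "M" },
];
console.log(averageSentimentBy(findings, "showers", "gender")); // { F: -1.5, M: 1 }
```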

Text Analytics Uncovers Important Insights For Your Business

With your text analytics solution, you can see immediately that your members are generally positive about the staff, but you seem to have a problem with the lockers and the equipment. This lets you resolve the problem quickly, before more members have a bad experience.

Find Out Whether Verbatim Analysis Software Or Text Analytics Is Right For Your Business

Verbatim coding is a very well-developed part of traditional survey research. The coders who lead the analysis are experts in verbatim coding and in the verbatim analysis software, and they play an important role in overseeing the analysis and the results. Verbatim coding produces very high accuracy, but it can also be expensive and time consuming. Text analytics also analyzes open ends, but it relies more on the computer to analyze the comments and uncover insights. Its results are not quite as accurate, but it delivers them in a fraction of the time and at a fraction of the cost.

Ascribe provides both traditional verbatim coding with Coder and advanced text analytics with CX Inspector. To learn more about whether verbatim coding or text analytics is right for your business, contact us or schedule a demo today.

7/23/19


Ascribe Regular Expressions

Ascribe allows the use of regular expressions in many areas, including codes in a codebook and various search operations. The regular expressions in Ascribe include powerful features not found in standard regular expressions. In this article we will explain regular expressions and the extensions available in Ascribe.

Regular Expressions

A regular expression is a pattern used to match text. Regular expression patterns are themselves text, but with certain special features that give flexibility in text matching.

In most cases, a character in a regular expression simply matches that same character in the text being searched. The regular expression love matches the same four characters in the sentence:

I loved the food and service.

We will write:

love ⇒ I loved the food and service. (matches “love” in “loved”)

to mean “the regular expression ‘love’ matches these characters in this text”, with the matched characters noted in parentheses. Here are a few other examples:

e ⇒ I loved the food and service. (matches every “e”)

d a ⇒ I loved the food and service. (matches “d a” in “food and”)

b ⇒ Big Bad John. (matches both “B”s)

Note in the last example that in Ascribe regular expressions are case insensitive. The character ‘b’ matches ‘b’ or ‘B’, and the character ‘B’ also matches ‘b’ and ‘B’.

So far regular expressions don’t seem to do anything beyond plain text matching. But not all characters in a regular expression simply match themselves. Some characters are special. We will run through some of the special characters you will use most often.
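If you want to experiment with simple patterns like these outside Ascribe, JavaScript’s built-in regular expressions behave the same way for these basic cases. This is a plain JavaScript sketch, not Ascribe code: the i flag stands in for Ascribe’s case-insensitive matching, and the g flag asks for every match rather than just the first. Ascribe’s own engine may differ from JavaScript in more advanced features.

```javascript
// Plain JavaScript, not Ascribe: "i" = case insensitive, "g" = all matches.
const sentence = "I loved the food and service.";

console.log(sentence.match(/love/gi)); // [ 'love' ]
console.log(sentence.match(/e/gi).length); // 4
console.log(sentence.match(/d a/gi)); // [ 'd a' ]
console.log("Big Bad John.".match(/b/gi)); // [ 'B', 'B' ]
```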

The . (dot or period) character

A dot matches any character:

e. ⇒ I loved the food and service. (matches “ed”, “e ” with its trailing space, “er”, and “e.”)

To be precise, the dot matches any character except a newline character, but that will rarely matter to you in practice.
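The same dot example in plain JavaScript (a sketch for experimenting outside Ascribe), including the newline exception:

```javascript
const sentence = "I loved the food and service.";

// Each match is an "e" plus whatever single character follows it.
console.log(sentence.match(/e./gi)); // [ 'ed', 'e ', 'er', 'e.' ]

// The dot does not match a newline, so an "e" at the end of a line fails.
console.log(/e./.test("the\n")); // false
```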

The ? (question mark) character

A question mark following a normal character means that character is optional. The pattern will match whether or not the character before the question mark is in the text:

dogs? ⇒ A dog is a great pet. I love dogs! (matches “dog” and “dogs”)
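In plain JavaScript (again, a sketch outside Ascribe) the optional character works the same way:

```javascript
const text = "A dog is a great pet. I love dogs!";

// "s?" makes the final "s" optional, so both forms of the word match.
console.log(text.match(/dogs?/gi)); // [ 'dog', 'dogs' ]
```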

Character classes

You can make a pattern to match any of a set of characters using character classes. You put the characters to match in square brackets:

cott[oeu]n ⇒ Cotton shirts, cotten pants, cottun blouse (matches all three spellings)

Both the question mark and character classes are great when trying to match common spelling errors. They can be particularly useful when creating regular expressions to match brand mentions:

budw[ei][ei]?ser ⇒ Budweiser, Budwieser, Budwiser, Budweser (matches all four)

Note that the question mark following a character class means that the character class is optional.

Some character classes are used so often that there are shorthand notations for them. These begin with a backslash character. The \w character class matches any word character, meaning the letters of the alphabet, digits, and the _ (underscore) character. It does not match hyphens, apostrophes, or other punctuation characters:

t\we ⇒ The tee time is 2:00. (matches “The” and “tee”)

The backslash character can also be used to change a special character such as ? into a normal character to match:

dogs\? ⇒ A dog is a great pet. Do you have any dogs? I love dogs! (matches only “dogs?”)
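These character class examples translate directly to plain JavaScript, which can be handy for trying out brand-name patterns before entering them in Ascribe (a sketch, not Ascribe code):

```javascript
// A character class matches one character from the set in brackets.
console.log("Cotton shirts, cotten pants, cottun blouse".match(/cott[oeu]n/gi));
// [ 'Cotton', 'cotten', 'cottun' ]

// An optional class catches both swapped and dropped letters.
const brands = "Budweiser, Budwieser, Budwiser, Budweser";
console.log(brands.match(/budw[ei][ei]?ser/gi).length); // 4

// \w matches one word character; \? matches a literal question mark.
console.log("The tee time is 2:00.".match(/t\we/gi)); // [ 'The', 'tee' ]
console.log("Do you have any dogs? I love dogs!".match(/dogs\?/gi)); // [ 'dogs?' ]
```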

Quantifiers

The quantifiers * and + mean that the preceding expression can repeat. The * means the expression can repeat zero or more times, and the + means it can repeat one or more times. So * means the preceding expression is optional, but if present it can repeat any number of times. While occasionally useful following a normal character, quantifiers are more often used following character classes. To match a whole word, we can write:

\w+ ⇒ I don’t have a cat. (matches “I”, “don”, “t”, “have”, “a”, and “cat”)

Note that the apostrophe is not matched.
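A quick plain JavaScript check of both quantifiers (a sketch for experimenting, not Ascribe code):

```javascript
// \w+ grabs runs of word characters; the apostrophe splits "don’t".
console.log("I don’t have a cat.".match(/\w+/g)); // [ 'I', 'don', 't', 'have', 'a', 'cat' ]

// * allows zero repetitions of the preceding item; + requires at least one.
console.log(/ab*c/.test("ac"), /ab+c/.test("ac")); // true false
```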

Anchors

Anchors don’t match any characters in the text, but match special locations in the text. The most important of these for use with Ascribe is \b, which matches a word boundary. This is useful for searching for whole words:

\bday\b ⇒ Today is the Winter solstice. Each day gets longer, with more hours of daylight. (matches only the word “day”)

Combined with \w* we can find words that start with “day”:

\bday\w*\b ⇒ Today is the Winter solstice. Each day gets longer, with more hours of daylight. (matches “day” and “daylight”)

or words that contain “day”:

\b\w*day\w*\b ⇒ Today is the Winter solstice. Each day gets longer, with more hours of daylight. (matches “Today”, “day”, and “daylight”)

Two other useful anchors are ^ and $, which match the start and end of the text respectively.
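The word boundary examples, plus ^ and $, in plain JavaScript (a sketch for experimenting outside Ascribe):

```javascript
const text = "Today is the Winter solstice. Each day gets longer, with more hours of daylight.";

console.log(text.match(/\bday\b/gi)); // [ 'day' ]
console.log(text.match(/\bday\w*\b/gi)); // [ 'day', 'daylight' ]
console.log(text.match(/\b\w*day\w*\b/gi)); // [ 'Today', 'day', 'daylight' ]

// ^ and $ anchor to the start and end of the whole text.
console.log(/^today/i.test(text), /daylight\.$/i.test(text)); // true true
```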

Alternation

The vertical bar character | matches the expression on either side of it. You can think of this as an OR operation. Here is a pattern to match either the word “staff” or a word starting with “perso”:

\bstaff\b|\bperso\w+\b ⇒ The staff was friendly. Your support personnel are great! (matches “staff” and “personnel”)
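The same alternation in plain JavaScript (a sketch, not Ascribe code):

```javascript
const text = "The staff was friendly. Your support personnel are great!";

// | tries the left alternative, then the right one, at each position.
console.log(text.match(/\bstaff\b|\bperso\w+\b/gi)); // [ 'staff', 'personnel' ]
```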

Regular expression reference

This is just a glimpse into the subject of regular expressions. There are books written on the subject, but to make effective use of regular expressions in Ascribe you don’t need to dig that deep. You can find lots of information about regular expressions on the web. Here is one nice tutorial site: RegexOne

The definitive reference for the regular expressions supported in Ascribe is found here: Regular Expression Language

You can also download a quick reference file: Regular Expression Language - Quick Reference

Ascribe Extended Regular Expressions

Ascribe adds a few additional operators to standard regular expressions. These are used to tidy up searching for whole words, and to add more powerful logical operations.

Word matching

As we have mentioned, you can use \b to find whole words:

\bstaff\b|\bperso\w+\b ⇒ The staff was friendly. Your support personnel are great!

But those \b’s and \w’s make things hard to read. Ascribe allows you to use these notations instead:

| Notation | Standard regex equivalent | Meaning |
|---|---|---|
| < | (\b | Start of word |
| > | \b) | End of word |
| << | (\b\w* | Word containing ... |
| >> | \w*\b) | ... to end of word |

We can now write:

- <as> (the word “as”)

- <as>> (a word starting with “as”)

- <<as> (a word ending with “as”)

- <<as>> (a word containing “as”)

Examples:

<staff>|<perso>> ⇒ The staff was friendly. Your support personnel are great! (matches “staff” and “personnel”)

<bud> ⇒ Bud, Bud light, Budweiser, Budwieser (matches the two standalone “Bud”s)

<bud>> ⇒ Bud, Bud light, Budweiser, Budwieser (matches “Bud” twice, plus “Budweiser” and “Budwieser”)

<<ser> ⇒ Bud, Bud light, Budweiser, Budwieser (matches “Budweiser” and “Budwieser”)

<<w[ei]>> ⇒ Bud, Bud light, Budweiser, Budwieser (matches “Budweiser” and “Budwieser”)

If you look in the table above, you will note that each of the standard regular expression equivalents has a parenthesis in it. Yes, you can use parentheses in regular expressions, much as in algebraic formulas. It’s an error in the regular expression to have a mismatched parenthesis. So, the pattern

<as

is not legal. The implied starting parenthesis has no matching closing parenthesis. This forces you to use these notations in pairs, which is the intended usage.

The use of these angle brackets does not add any power to regular expressions; they just make your expressions easier to read. The logical operators I describe next do add significant new power.
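Because the angle-bracket notation is a straight text substitution, its behavior can be imitated by expanding each token into its standard equivalent from the notation table. The sketch below is plain JavaScript and purely illustrative: expandWordNotation and ascribeMatches are hypothetical helpers, not Ascribe functions, and the expansion is naive (it does not handle escaped or literal angle brackets).

```javascript
// Hypothetical translator from Ascribe word notation to a standard regex,
// following the notation table. Naive: no support for literal "<" or ">".
const WORD_NOTATION = { "<<": "(\\b\\w*", ">>": "\\w*\\b)", "<": "(\\b", ">": "\\b)" };

function expandWordNotation(pattern) {
  // "<<" and ">>" come first in the alternation so they win over "<" and ">".
  return pattern.replace(/<<|>>|<|>/g, (token) => WORD_NOTATION[token]);
}

function ascribeMatches(pattern, text) {
  return text.match(new RegExp(expandWordNotation(pattern), "gi")) || [];
}

const beers = "Bud, Bud light, Budweiser, Budwieser";
console.log(expandWordNotation("<as>>")); // (\bas\w*\b)
console.log(ascribeMatches("<bud>", beers)); // [ 'Bud', 'Bud' ]
console.log(ascribeMatches("<bud>>", beers)); // [ 'Bud', 'Bud', 'Budweiser', 'Budwieser' ]
console.log(ascribeMatches("<<ser>", beers)); // [ 'Budweiser', 'Budwieser' ]
console.log(ascribeMatches("<<w[ei]>>", beers)); // [ 'Budweiser', 'Budwieser' ]
```

The expansion also shows why a lone “<as” is rejected: it becomes “(\bas” with an unclosed parenthesis.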

Logical operators

We have seen that it is easy to search for one word or another:

<staff>|<personnel>

matches either of these words. What if we want to search for text that contains both the words “cancel” and “subscription”? This is not supported in standard regular expressions, but it is supported in Ascribe. We can write

<cancel>&&<subscription>

This matches text that contains both words, in either order. You can read && as “AND”.

We can also search for text that contains one word and does not contain another word. This expression:

<bud>>&~<light>|<lite>

matches text that contains a word starting with “bud” but does not contain “lite” or “light”. You can read &~ as “AND NOT”.

The && and &~ operators must be surrounded on either side by valid regular expressions, except that the &~ operator may begin the pattern. We can rewrite the last example as:

&~<light>|<lite>&&<bud>>

which means exactly the same thing.

Pay attention to the fact that these logical operators require that the expressions on either side are valid on their own. This pattern is illegal:

(<cancel>&&<subscription>)

because neither of the expressions

(<cancel>

<subscription>)

is legal when considered independently. There are mismatched parentheses in each.
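One way to picture how && and &~ behave is to split the pattern at the operators and test each piece as an ordinary regular expression. The sketch below is plain JavaScript and hypothetical, not Ascribe’s implementation: it assumes a text matches when every && term matches and no &~ term does, and its naive splitting would also break on an “&&” inside a character class.

```javascript
// Hypothetical sketch of Ascribe's && (AND) and &~ (AND NOT) operators.
// Assumption: a text matches when every && term matches and no &~ term does.
const WORD_NOTATION = { "<<": "(\\b\\w*", ">>": "\\w*\\b)", "<": "(\\b", ">": "\\b)" };

function expandWordNotation(pattern) {
  return pattern.replace(/<<|>>|<|>/g, (token) => WORD_NOTATION[token]);
}

function ascribeTest(pattern, text) {
  // A capturing group in split() keeps the operators in the result array.
  const parts = pattern.split(/(&&|&~)/);
  let operator = "&&"; // the first term must match unless &~ precedes it
  for (const part of parts) {
    if (part === "&&" || part === "&~") {
      operator = part;
    } else if (part !== "") {
      // Each term is compiled on its own, so "(<cancel>" alone would throw.
      const found = new RegExp(expandWordNotation(part), "i").test(text);
      if (operator === "&&" && !found) return false;
      if (operator === "&~" && found) return false;
    }
  }
  return true;
}

console.log(ascribeTest("<cancel>&&<subscription>", "Please cancel my subscription.")); // true
console.log(ascribeTest("<cancel>&&<subscription>", "My subscription renews monthly.")); // false
console.log(ascribeTest("<bud>>&~<light>|<lite>", "A cold Budweiser, please.")); // true
console.log(ascribeTest("&~<light>|<lite>&&<bud>>", "A Bud Light, please.")); // false
```

Compiling each term separately is also why the whole pattern must not be wrapped in parentheses: each half would carry an unmatched one.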

Pattern prefixes

There are two prefixes you can use that change the meaning of the match pattern that follows them. These must be the first character of the pattern. The prefixes are:

- Asterisk (*): plain text matching

- Greater-than sign (>): standard regular expression matching, without the Ascribe extensions

These prefixes are useful when you are trying to match one of the special characters in regular expressions. Suppose we really want to match “why?”. Without a prefix we get:

why? ⇒ What are you doing and why? Or why not? (matches “Wh” in “What” and both occurrences of “why”, without the question mark)

But with the * prefix we match only the intended word, with its question mark:

*why? ⇒ What are you doing and why? Or why not? (matches only “why?”)

The > prefix is useful if you are searching for an angle bracket or one of the Ascribe logical operators:

>\d+ < \d+ ⇒ The algebraic equation 22 < 25 is true. (matches “22 < 25”)

It helps to know that \d matches any digit.
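The effect of the two prefixes can be imitated in plain JavaScript by choosing how to compile the rest of the pattern. This is a hypothetical sketch, not Ascribe code: toRegExp and escapeRegExp are illustrative helpers, and the logical operators are left out for brevity.

```javascript
// Hypothetical sketch of the pattern prefixes:
//   "*" -> plain text (every character literal)
//   ">" -> standard regex, no Ascribe extensions
// Anything else gets the word-notation expansion.
const WORD_NOTATION = { "<<": "(\\b\\w*", ">>": "\\w*\\b)", "<": "(\\b", ">": "\\b)" };

function expandWordNotation(pattern) {
  return pattern.replace(/<<|>>|<|>/g, (token) => WORD_NOTATION[token]);
}

// Escape every regex special character so that it matches itself.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

function toRegExp(pattern) {
  if (pattern.startsWith("*")) return new RegExp(escapeRegExp(pattern.slice(1)), "gi");
  if (pattern.startsWith(">")) return new RegExp(pattern.slice(1), "gi");
  return new RegExp(expandWordNotation(pattern), "gi");
}

const question = "What are you doing and why? Or why not?";
console.log(question.match(toRegExp("why?"))); // [ 'Wh', 'why', 'why' ]
console.log(question.match(toRegExp("*why?"))); // [ 'why?' ]

const equation = "The algebraic equation 22 < 25 is true.";
console.log(equation.match(toRegExp(String.raw`>\d+ < \d+`))); // [ '22 < 25' ]
```

Without the > prefix, the “<” in that last pattern would be expanded as a start-of-word marker and leave an unmatched parenthesis.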

Summary

Regular expressions are a powerful feature of Ascribe. Spending a little time getting used to them will pay off as you use Ascribe. The Ascribe extensions to regular expressions make searching for words easier, and support logical AND and NOT operations.

3/18/19


Using Class Rules in an Ascribe Rule Set

Class rules allow you to share code across rules, and even across Rule Sets. Using Class rules can help you organize your code and make it easier to maintain and reuse.

Introduction to Classes

As I wrote in Authoring Ascribe Rule Sets, you write rules using JScript, a superset of ECMA 3 JavaScript. An important feature of JScript is the introduction of classes. Classes are the foundation of traditional object-oriented programming.

Classes are defined with the keyword class. They can contain properties (technically fields) and methods. Here is an example of a class definition:

```
class Animal {
    // A property:
    var age: int = 2;

    // A method:
    function GetName() {
        return "Giraffe";
    }
}
```

Once you have defined a class you can use it in another rule:

```
var animal = new Animal();    // create an instance of Animal
var name = animal.GetName();  // get its name
var age = animal.age;         // get its age
f.println("name:", name);     // name: Giraffe
f.println("age:", age);       // age: 2
```

I won’t try to cover the topic of object-oriented programming and the details of the use of classes. You can read up on the subject in the JScript documentation: Creating Your Own Classes. I will cover two of the important use cases for classes: code reuse and performance.

Code Reuse

In my post Using Taxonomies in Ascribe Rule Sets I described how to use taxonomies in a Rule Set. Suppose that we want to use a taxonomy in more than one rule. One way to do this would be to copy the taxonomy code from one rule and paste it into another. This has obvious problems. If we want to make a correction to the taxonomy, we would have to make the same correction in all rules that use the taxonomy. It also makes our rules longer and harder to read.

Let’s suppose that we are interested in knowing whether the topic or expression of a finding contains a sensitive word. We might use this code:

```
var taxonomy = new Taxonomy();

// Add a list of sensitive words
// We don’t care about the group text, so we use an empty string
taxonomy.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");

if (taxonomy.IsMatch(f.t) || taxonomy.IsMatch(f.e)) {
    // We found a sensitive word, take some action here…
}
```

We might find this logic useful in several rules in our Rule Set. That makes it a candidate for a Class rule. We can make a Class rule:

```
class Sensitive {
    var taxonomy = new Taxonomy();

    // class constructor
    function Sensitive() {
        taxonomy.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");
    }

    // Return true if text contains a sensitive word
    function Is(text: String) {
        return taxonomy.IsMatch(text);
    }
}
```

This class has a constructor that sets up our taxonomy, and a method Is that returns true if the text passed to it contains a sensitive word.

We can now use this class in any rule in our Rule Set. Let’s use it to mark the topic if the expression contains a sensitive word. We can use this Modify Finding rule:

```
// Mark topics where expression contains sensitive word
var sensitive = new Sensitive();
if (sensitive.Is(f.e)) {
    f.t = "Sensitive: " + f.t;
}
```

This rule will prepend “Sensitive: ” to the topic of any finding where the expression contains one of our sensitive words.

Performance

Looking at the last example above, you can see that we create a new instance of the Sensitive class every time the rule is invoked. This is OK for lightweight objects like the Sensitive class, but can lead to performance problems if the class contains more initialization code. We can improve our class by using the static keyword to create variables that are members of the class itself, rather than of an instance of the class. Here is a new implementation of the Sensitive class that uses this approach:

```
class Sensitive2 {
    static var taxonomy = new Taxonomy();

    // static constructor
    static Sensitive2 {
        taxonomy.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");
    }

    // Return true if text contains a sensitive word
    static function Is(text: String) {
        return taxonomy.IsMatch(text);
    }
}
```

We have simply added the static keyword to the property and method of the class, and modified the constructor by adding the static keyword and removing the parentheses for the empty argument list. Static constructors may not have an argument list.

We can now use this class in our Modify Finding rule in a way that is both more convenient and more performant:

```
// Mark topics where expression contains sensitive word
if (Sensitive2.Is(f.e)) {
    f.t = "Sensitive: " + f.t;
}
```

Note that we no longer create an instance of the class, but simply invoke the Is method on the class itself. The performance advantage comes from the fact that the static constructor will be called only once, regardless of how many times the rule is invoked.
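The “called only once” behavior of a static constructor has a close analogue in modern JavaScript’s static initialization blocks. The sketch below is plain JavaScript (ES2022), not JScript and not Ascribe code; the two classes and the counters are illustrative stand-ins for Sensitive and Sensitive2, with a regular expression in place of the Taxonomy object.

```javascript
// Plain JavaScript analogy, not JScript/Ascribe code. Requires ES2022
// static blocks (for example Node 16.11 or later).
let instanceSetups = 0;
let staticSetups = 0;

// Like Sensitive: setup work runs in the constructor on every `new`.
class SensitiveInstance {
  constructor() {
    instanceSetups++;
    this.words = /\b(sue|lawyer|suspend|cancel)\b/i;
  }
  is(text) {
    return this.words.test(text);
  }
}

// Like Sensitive2: setup work runs once, when the class is evaluated.
class SensitiveStatic {
  static words;
  static {
    staticSetups++;
    this.words = /\b(sue|lawyer|suspend|cancel)\b/i;
  }
  static is(text) {
    return this.words.test(text);
  }
}

// Simulate a rule invoked once per finding expression.
const expressions = ["I will cancel", "great gym", "call my lawyer"];
const flaggedA = expressions.filter((e) => new SensitiveInstance().is(e));
const flaggedB = expressions.filter((e) => SensitiveStatic.is(e));

console.log(instanceSetups, staticSetups); // 3 1
console.log(flaggedA, flaggedB);
```

Both versions flag the same expressions; only the amount of repeated setup work differs.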

Spelling Correction Example

We can build on this approach by creating a more complete example: a class that corrects many common English misspellings.

```
// English spelling corrections
class English {
    private static var taxonomy: Taxonomy;

    // The first element is the correct spelling (the group text);
    // the remaining elements are misspellings (the synonyms).
    private static function Add(groupSynonyms: String[]) {
        if (groupSynonyms.length > 1) {
            var group: Group = taxonomy.AddGroup(groupSynonyms[0]);
            for (var i: int = 1; i < groupSynonyms.length; i++) {
                group.AddRegex("<" + groupSynonyms[i] + ">");
            }
        }
    }

    public static function Correct(t: String) {
        return taxonomy.Replace(t);
    }

    // static constructor
    static English {
        taxonomy = new Taxonomy();
        Add(["a lot", "alot"]);
        Add(["about a", "abouta"]);
        Add(["about it", "aboutit"]);
        // ... many more such lines ...
        Add(["your", "yuor"]);
    }
}
```

This implementation also demonstrates the use of the public and private keywords to permit or deny outside access to the members of the class.

We can use our new class in a Modify Response on Load rule very simply:

```
f.r = English.Correct(f.r);
```
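The idea behind English.Correct can be sketched outside Ascribe in plain JavaScript. This is an illustration under an assumption: it supposes that Taxonomy.Replace swaps each synonym match for its group text, which matches how the class is used here but is not a documented description of Ascribe’s implementation. The corrections list and the correct function are hypothetical stand-ins.

```javascript
// Plain JavaScript sketch of the idea behind English.Correct, not Ascribe
// code. Assumption: Taxonomy.Replace swaps each synonym match for its group
// text. Each entry lists the correct spelling first, then misspellings.
const corrections = [
  ["a lot", "alot"],
  ["about a", "abouta"],
  ["about it", "aboutit"],
  ["your", "yuor"],
];

// One whole-word, case-insensitive pattern per misspelling, mirroring the
// "<" + synonym + ">" patterns built by English.Add.
const rules = [];
for (const [group, ...synonyms] of corrections) {
  for (const synonym of synonyms) {
    rules.push({ pattern: new RegExp("\\b" + synonym + "\\b", "gi"), group });
  }
}

function correct(text) {
  let result = text;
  for (const { pattern, group } of rules) {
    result = result.replace(pattern, group);
  }
  return result;
}

console.log(correct("Thanks alot for yuor help")); // Thanks a lot for your help
```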

Testing Class Rules

Testing Class rules requires a bit more work than testing other rule types. This is because your Class rules do not necessarily operate on a single Finding like the other rule types do. So you need to add your own test code to test Class rules. You can add test code using this template:

```
@if(@test)
class Tester {
    function test(f: Finding) {
        // your test code here...
        return f; // You may also return an array of Finding objects
    }
}
@end
```

The conditional compilation variable @test is set to true by the Rule Set compiler when testing rules in the rule edit dialog. It is set to false when the rule is actually used in an Inspection. The test code above will therefore be compiled only when testing the rule.

When testing a Class rule, Ascribe searches for a class named Tester with a method named test that accepts a Finding argument. If found, it invokes the method with a Finding with properties as specified in the dialog. The Finding returned by the test method is displayed in the test results section of the dialog.

Here is an implementation of the Sensitive2 class in our previous example, with test code:

```
class Sensitive2 {
    static var taxonomy = new Taxonomy();

    // static constructor
    static Sensitive2 {
        taxonomy.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");
    }

    // Return true if text contains a sensitive word
    static function Is(text: String) {
        return taxonomy.IsMatch(text);
    }
}

@if(@test)
class Tester {
    function test(f: Finding) {
        if (Sensitive2.Is(f.e)) {
            f.t = "Sensitive: " + f.t;
        }
        return f;
    }
}
@end
```

Sharing Class Rules Across Rule Sets

If we have developed an elaborate class such as our spelling corrector, we may well want to use it in more than one Rule Set. We can do so by placing the Class rule in a Rule Set with ID Global Classes. This ID is special and indicates that this Rule Set contains one or more Class rules that should be available to all Rule Sets. We recommend adopting a naming convention for classes defined in these Class rules, such as prefixing the word “Global” to each class name. This helps make it clear in rules that use these shared Class rules that they are defined in the Global Classes Rule Set.

When using a Global Classes Rule Set be aware of certain pitfalls:

- The classes defined in the Global Classes Rule Set are imported into other Rule Sets only if the Global Classes Rule Set is enabled and not marked for deletion.

- You can easily break all your Rule Sets that use the shared classes by deleting or disabling the Global Classes Rule Set, or by introducing bad code into a class in this Rule Set.

Because problems in the Global Classes Rule Set will be shared by all Rule Sets that use it, we recommend carefully testing your class definitions in a separate Rule Set before adding the tested code to the Global Classes Rule Set.

Summary

Class rules can be used for code reuse and performance improvement in your Rule Sets. Although not covered in this article, they also allow you to introduce user-defined data types. There is a bit to learn about how to create Class rules, but the small investment of time will pay off if you plan to develop a significant collection of Rule Sets.

3/13/19


Using Taxonomies in Ascribe Rule Sets

You can create and use taxonomies in Ascribe Rule Sets to help with common text manipulation chores, such as cleaning comments.

In this article I will explain how to use a taxonomy in a Rule Set. We will use the taxonomy to correct common misspellings of words that are important to the text analytics work we are doing. If you are new to Rule Sets you can learn about them in Introduction to Ascribe Rule Sets.

A taxonomy contains a list of groups, each of which has a list of synonyms. The taxonomy can be used to find words or phrases and map them to the group. In our case the group will be the properly spelled word, and the synonyms will be misspelled versions of that word.

Rule Sets do not have direct access to the taxonomies stored in Ascribe (the ones on the Taxonomies page). A Rule Set can, however, create and use its own taxonomies. These exist only while the Rule Set is running and are not stored on the Taxonomies page in Ascribe.

Setting up a Taxonomy

You create a Taxonomy object in a rule like so:

var taxonomy = new Taxonomy();

You can now add groups and synonyms to the taxonomy. To add a group to the taxonomy, we write:

var group = taxonomy.AddGroup("technical");

The variable group holds the new group, whose text is “technical”. We can now add regular expression synonyms to the group for common misspellings of “technical”:

group.AddRegex("tecnical");

group.AddRegex("technecal");

We can improve this by adding the \b operator at the start and end of our regular expression patterns, so that they match only whole words. Because the patterns are JavaScript string literals, each backslash must be doubled:

group.AddRegex("\\btecnical\\b");

group.AddRegex("\\btechnecal\\b");

One of the nice things about using the Taxonomy object is that you can use the extensions to regular expressions provided by Ascribe. The \b operator makes the regular expression patterns hard to read. We can replace it with the < and > operators that Ascribe provides for the start and end of a word:

group.AddRegex("<tecnical>");

group.AddRegex("<technecal>");

And if you are well up on your regular expressions, you will realize that we could do this with a single pattern:

group.AddRegex("<tecnical>|<technecal>");

We will, however, continue with the simple two-synonym version for purposes of this discussion.

Because this pattern of “add group, then add synonyms to the group” is so common, the Taxonomy object supports a more compact syntax to add a group with any number of synonyms:

taxonomy.AddGroupAndSynonyms("technical", "<tecnical>", "<technecal>");

Here the first parameter is the group text, which may be followed by any number of regular expression patterns for the synonyms.
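To make the relationship between groups, synonyms, and the < and > operators concrete, here is a minimal sketch in plain JavaScript that can run outside Ascribe (for example in Node.js). SketchTaxonomy, SketchGroup, and translateWordAnchors are hypothetical names; the method names mirror the article, but the internals are assumptions, including that < and > behave like \b and that matching is case-insensitive. It is an illustration, not Ascribe's implementation.

```javascript
// Hypothetical stand-in for the Ascribe Taxonomy object, for experimenting
// outside Ascribe. Method names follow the article; internals are assumed.

// Assumption: < and > act as word boundaries, so map both to JavaScript's \b.
// Simplification: every < and > is treated as an operator, so escaped
// characters and (?<name>...) or (?<=...) syntax are not supported.
function translateWordAnchors(pattern) {
  return pattern.replace(/[<>]/g, "\\b");
}

class SketchGroup {
  constructor(text) {
    this.text = text;
    this.synonyms = []; // compiled RegExp objects, one per synonym pattern
  }

  // Add one synonym pattern (case-insensitive matching is an assumption).
  AddRegex(pattern) {
    this.synonyms.push(new RegExp(translateWordAnchors(pattern), "i"));
    return this;
  }
}

class SketchTaxonomy {
  constructor() {
    this.groups = []; // groups in the order they were added
  }

  AddGroup(text) {
    const group = new SketchGroup(text);
    this.groups.push(group);
    return group;
  }

  // Compact form: the group text followed by any number of synonym patterns.
  AddGroupAndSynonyms(text, ...patterns) {
    const group = this.AddGroup(text);
    for (const pattern of patterns) group.AddRegex(pattern);
    return group;
  }
}

// Long form: add the group, then its synonyms.
const longForm = new SketchTaxonomy();
const g = longForm.AddGroup("technical");
g.AddRegex("<tecnical>");
g.AddRegex("<technecal>");

// Compact form: builds an equivalent group in one call.
const compact = new SketchTaxonomy();
compact.AddGroupAndSynonyms("technical", "<tecnical>", "<technecal>");

console.log(g.synonyms[0].test("a tecnical fault")); // true
console.log(g.synonyms[0].test("nontecnical")); // false: not a whole word

// In a string literal "\b" is a backspace, so this pattern misses the word;
// "\\b" is what actually produces the regex word boundary.
console.log(new RegExp("\btecnical\b").test("a tecnical fault")); // false
console.log(new RegExp("\\btecnical\\b").test("a tecnical fault")); // true
```

The last two lines show why the backslash has to be doubled when \b is written directly in a string: the single-backslash version compiles to a pattern containing backspace characters, which never match ordinary comment text.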

Using a Taxonomy

We can ask whether any synonym in the taxonomy matches a given piece of text using the IsMatch method of the Taxonomy object. For example, we may set up a taxonomy with synonyms for words or phrases we want to call out as alerts. One way to use this would be to inject a finding into the text analytics results whenever a finding contains a sensitive word. Here is an Add Finding from Finding rule that demonstrates this:

var taxonomy = new Taxonomy();

// Add a list of sensitive words

// We don’t care about the group text, so we use an empty string

taxonomy.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");

if (taxonomy.IsMatch(f.t) || taxonomy.IsMatch(f.e)) {

f.t = "Alert!";

return f;

}

Here we add a new finding with the topic “Alert!” if the topic or expression contains one of the sensitive words we are looking for.

We can also use this technique to remove comments that contain nonsense character sequences at load time. Here is a Modify Response on Load rule that removes comments containing nonsense character sequences commonly typed by respondents using QWERTY keyboards:

var taxonomy = new Taxonomy();

taxonomy.AddGroupAndSynonyms("", "asdf", "qwer", "jkl;");

if (taxonomy.IsMatch(f.r)) {

return null; // If nonsense is found, the comment is not loaded

}

Returning to our original goal of correcting common misspellings when data are loaded, we can use the powerful Replace method of the Taxonomy object. Given a string of text, the Replace method replaces any text that matches a synonym in the taxonomy with the group text.

Here is an example Modify Response on Load rule:

var taxonomy = new Taxonomy();

taxonomy.AddGroupAndSynonyms("technical", "tech?n[ia]ca?l");

taxonomy.AddGroupAndSynonyms("support", "suport");

f.r = taxonomy.Replace(f.r);

This rule will change the comment:

I called tecnacl suport.

to:

I called technical support.
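The IsMatch and Replace behavior described in this section can be approximated outside Ascribe with the self-contained sketch below. SketchTaxonomy is a hypothetical stand-in, not Ascribe's code: mapping < and > to word boundaries, case-insensitive matching, and applying groups in the order they were added are all assumptions.

```javascript
// Hypothetical, minimal stand-in for Ascribe's Taxonomy object, used only to
// illustrate IsMatch and Replace outside Ascribe. Internals are assumptions.
class SketchTaxonomy {
  constructor() {
    this.groups = []; // { text, synonyms: RegExp[] } in insertion order
  }

  AddGroupAndSynonyms(text, ...patterns) {
    // Assumption: < and > mean "word boundary", like JavaScript's \b,
    // and matching is case-insensitive.
    const synonyms = patterns.map(
      (p) => new RegExp(p.replace(/[<>]/g, "\\b"), "i")
    );
    this.groups.push({ text, synonyms });
  }

  // True if any synonym in any group matches somewhere in the text.
  IsMatch(text) {
    return this.groups.some((g) => g.synonyms.some((re) => re.test(text)));
  }

  // Replace every synonym match with its group text, group by group.
  Replace(text) {
    let result = text;
    for (const g of this.groups) {
      for (const re of g.synonyms) {
        // Add the global flag only on a copy so IsMatch's regexes stay
        // stateless, and use a function so "$" in the group text is literal.
        const globalRe = new RegExp(re.source, re.flags + "g");
        result = result.replace(globalRe, () => g.text);
      }
    }
    return result;
  }
}

// Sensitive-word alerting, as in the Add Finding from Finding rule.
const alerts = new SketchTaxonomy();
alerts.AddGroupAndSynonyms("", "<sue>", "<lawyer>", "<suspend>", "<cancel>");
console.log(alerts.IsMatch("I will call my lawyer")); // true
console.log(alerts.IsMatch("We pursue quality")); // false: "sue" is not a whole word

// Spelling correction, as in the Modify Response on Load rule.
const spelling = new SketchTaxonomy();
spelling.AddGroupAndSynonyms("technical", "tech?n[ia]ca?l");
spelling.AddGroupAndSynonyms("support", "suport");
console.log(spelling.Replace("I called tecnacl suport.")); // I called technical support.
```

Replace creates a global copy of each regex rather than storing global regexes, because a global RegExp remembers its lastIndex between test calls, which could make repeated IsMatch calls give inconsistent answers.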

Performance Considerations

The examples in the last section create and populate a new Taxonomy object each time the rule is invoked. With small taxonomies such as those in the examples above, this is fine. But if the taxonomy is large, this can slow down your Rule Set dramatically. A much better approach with large taxonomies is to create a Class rule to hold the taxonomy. This can provide much better performance, with the added benefit of making the taxonomy available in all rules in the Rule Set. See my post Using Class Rules in an Ascribe Rule Set for more information.
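The performance advice comes down to building the taxonomy once and reusing it. The sketch below shows that idea in plain JavaScript with a lazily built, cached object. It does not use Ascribe's Class rule syntax (the Class rules post covers that), and buildTaxonomy, the two rule functions, and the small correction list are hypothetical.

```javascript
// Illustrates "build once, reuse" in plain JavaScript. This is not Ascribe's
// Class rule syntax; all names and the correction list are hypothetical.

let buildCount = 0; // counts how often the expensive setup runs

// Stand-in for an expensive setup step that compiles many synonym patterns.
function buildTaxonomy() {
  buildCount += 1;
  const corrections = {
    technical: "tech?n[ia]ca?l",
    support: "suport",
    // a real taxonomy might hold thousands of entries here
  };
  return Object.entries(corrections).map(([text, pattern]) => ({
    text,
    re: new RegExp(pattern, "gi"),
  }));
}

// Slow pattern: rebuild the taxonomy on every invocation.
function ruleRebuildEachTime(comment) {
  let result = comment;
  for (const { text, re } of buildTaxonomy()) {
    result = result.replace(re, () => text);
  }
  return result;
}

// Faster pattern: build lazily on first use, then reuse the cached object.
let cachedTaxonomy = null;
function ruleReuseCached(comment) {
  if (cachedTaxonomy === null) cachedTaxonomy = buildTaxonomy();
  let result = comment;
  for (const { text, re } of cachedTaxonomy) {
    result = result.replace(re, () => text);
  }
  return result;
}

const comments = ["I called tecnacl suport.", "Suport was great."];

buildCount = 0;
comments.forEach((c) => ruleRebuildEachTime(c));
console.log(buildCount); // 2

buildCount = 0;
comments.forEach((c) => ruleReuseCached(c));
console.log(buildCount); // 1
```

buildCount only makes the difference visible: the rebuild-every-time version pays the setup cost once per comment, while the cached version pays it once in total. As the article notes, a Class rule gives you this kind of reuse inside Ascribe and also shares the taxonomy across the rules in the Rule Set.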

Summary

Using a Taxonomy object in a Rule Set can simplify common tasks such as detection or replacement of certain words or phrases. Taxonomies have the added benefit of allowing the use of an extended regular expression syntax for word matching.

3/13/19

